With the increasing complexity of autonomous functionality in both AV and ADAS systems, traditional methodologies for developing safety-critical software are becoming inadequate. Because autonomous systems are designed to operate in complicated real-world driving domains, they will be expected to handle a nearly endless variety of scenarios. As new edge cases and scenarios are encountered during vehicle development, system requirements will need to be added and adjusted. Furthermore, software development for new production vehicles will not stop once the vehicle is on the road; new functionality will be expected through over-the-air updates. As a result, tracing software quality and avoiding regressions over time are critical to ensuring safe and expected autonomous behavior.

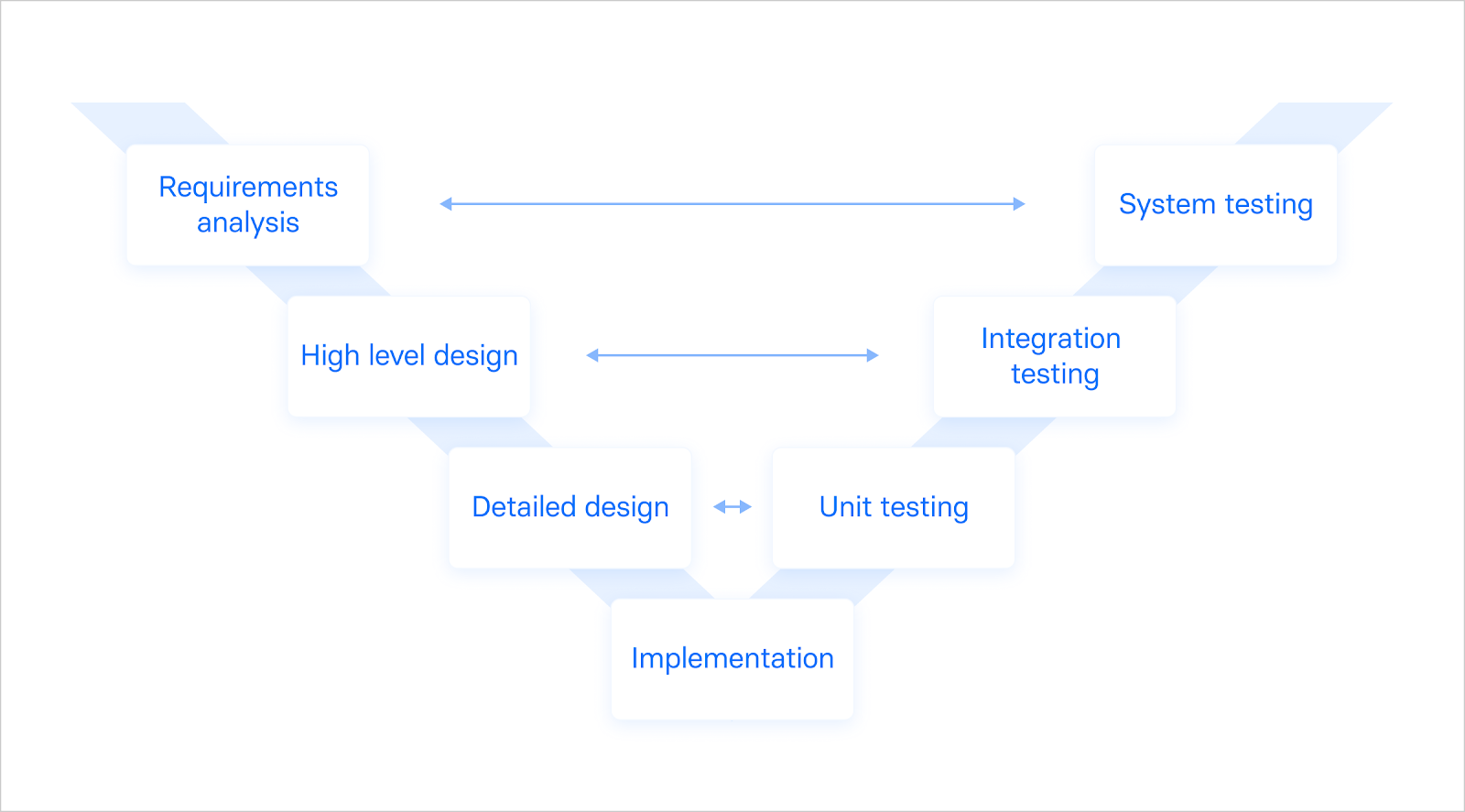

In traditional ADAS development, the V-model is often adopted to develop safety-critical systems and assess their safety (figure 1). The process consists of defining requirements, decomposing each requirement from its high-level design into the underlying components, and then testing the stack's performance against those requirements at each level. In practice, defining requirements that guarantee safety for more complex autonomous systems is challenging because of the proliferating number of potential scenarios. Requirements are also not static: they are adjusted as new information comes in from real-world driving encounters and as the system's operational design domain (ODD) broadens.

In this blog post, the Applied Intuition team looks into different approaches to defining requirements. It starts with the approach adopted by traditional systems engineering teams and moves on to more recent approaches taken by machine learning (ML)-driven AV companies. The team also discusses how requirements should be managed and kept up to date throughout the development lifecycle.

Types of Requirements:

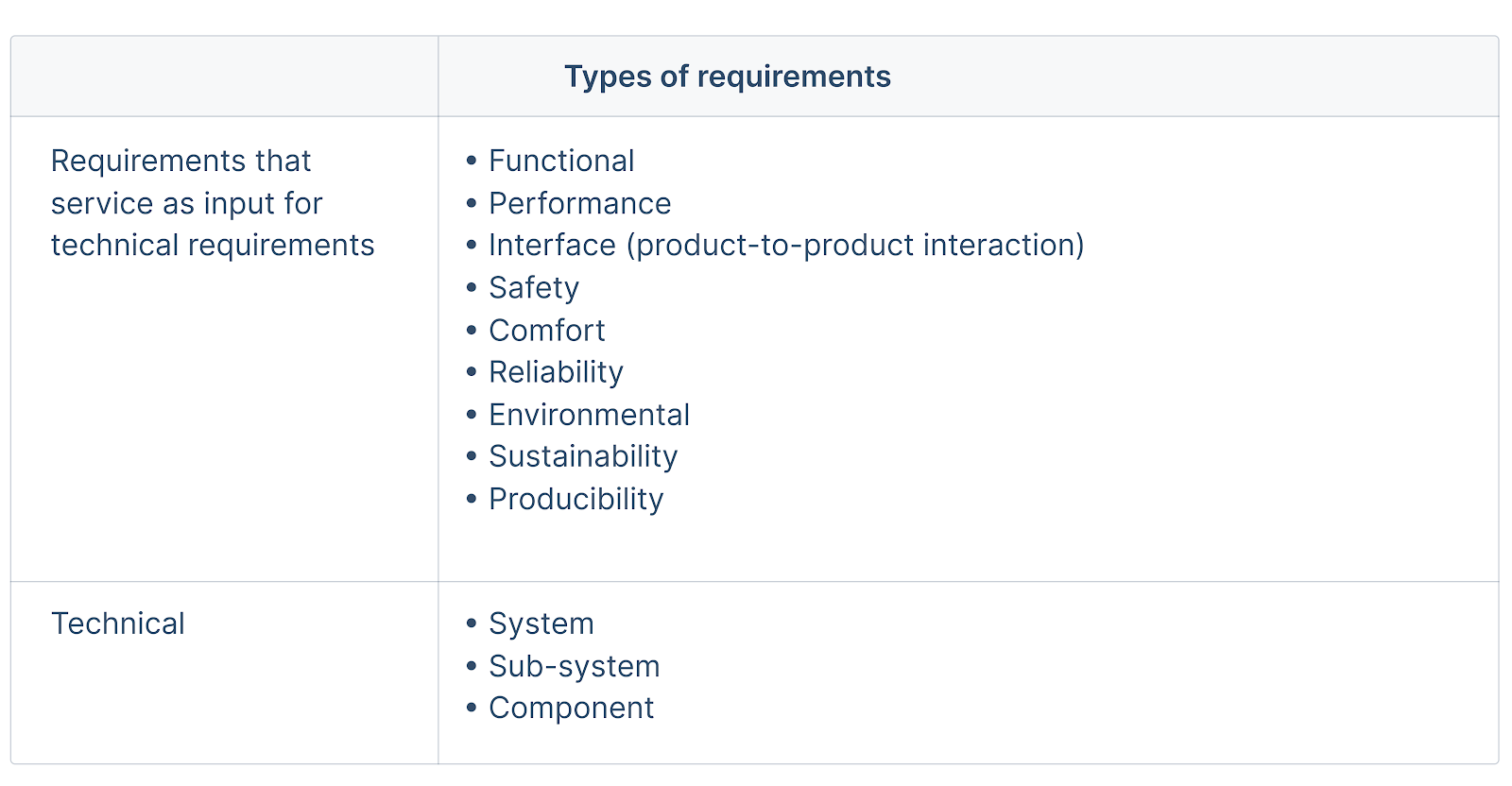

An autonomous driving system must meet the expectations of various stakeholders, such as internal engineering teams, passengers, regulatory authorities, and commercial fleet operators. The system must also meet multiple types of requirements (table 1). Requirements are typically developed from stakeholder expectations. Factors such as the concept of operations, enabling support strategies, measures of effectiveness, and industry safety standards should also be considered. For example, requirements should follow safety standards such as ISO/PAS 21448 (SOTIF) and ISO 26262. These standards aim, respectively, to ensure that the intended functions can be declared safe and to minimize risks in case of system and component failures.

Approaches to Defining Requirements:

Massive amounts of drive and simulation data are available (and in demand) for developing, verifying, and validating autonomous systems. Because of this, ML-driven AV programs approach requirements definition differently from traditional systems engineering teams.

A traditional approach to requirements definition:

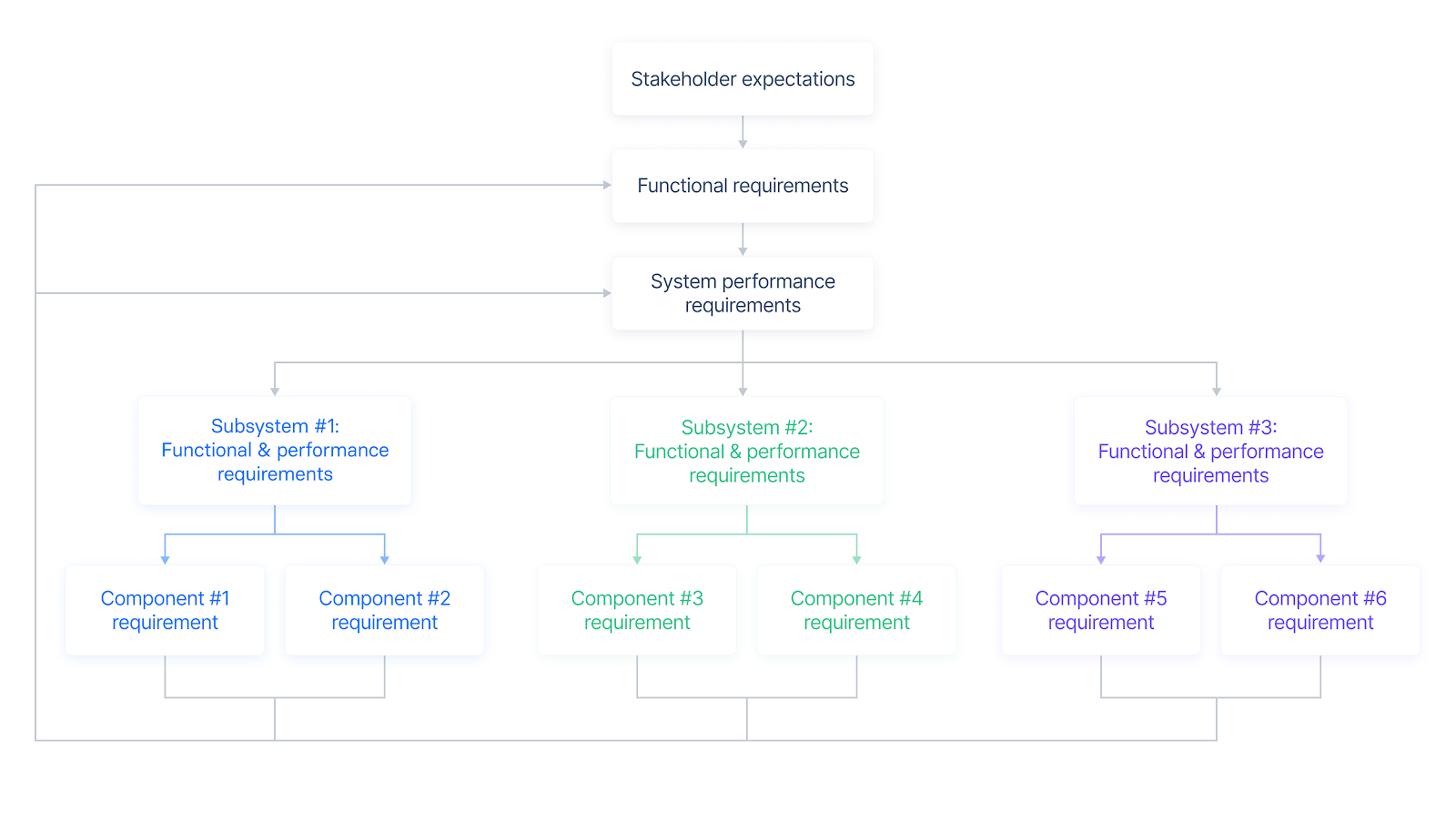

In traditional systems engineering, requirements definition follows a hierarchical structure. The highest-level requirements are broken down into functional and performance requirements and allocated across the autonomous system, its subsystems, and components (figure 2). Highest-level requirements include:

- stakeholder expectations (e.g., program goals, assumptions, functional/behavioral expectations)
- the concept of operations (how the system will be operated during the product life cycle)
- human/systems function allocation (how the hardware and software systems will interact with infrastructure and personnel)

These are assessed to identify technical problems and establish the design boundary. The requirements may then be further refined by establishing quantifiable performance and other technical criteria.
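The hierarchical decomposition described above can be sketched as a simple requirements tree, where each requirement records the level it is allocated to and the requirements derived from it. This is a minimal illustration, not a model of any particular tool. The class name `Requirement`, its fields, and the example IDs and wording are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One node in a hypothetical requirements hierarchy."""
    req_id: str
    text: str
    level: str  # e.g. "stakeholder", "system", "subsystem", "component"
    children: list = field(default_factory=list)  # derived Requirement objects

    def derive(self, req_id, text, level):
        """Create a child requirement allocated one level down and link it."""
        child = Requirement(req_id, text, level)
        self.children.append(child)
        return child

    def walk(self, depth=0):
        """Yield (depth, requirement) pairs in top-down order."""
        yield depth, self
        for child in self.children:
            yield from child.walk(depth + 1)


# Hypothetical example: one stakeholder expectation decomposed downward.
root = Requirement("STK-1", "Vehicle keeps a safe gap to the vehicle ahead", "stakeholder")
sys_req = root.derive("SYS-1", "Maintain a time gap of at least T seconds to the lead vehicle", "system")
sys_req.derive("PER-1", "Estimate lead-vehicle range within a bounded error", "subsystem")
plan = sys_req.derive("PLN-1", "Command deceleration when the time gap drops below T", "subsystem")
plan.derive("CMP-1", "Brake actuator reaches requested deceleration within a bounded latency", "component")

for depth, req in root.walk():
    print("  " * depth + f"{req.req_id} [{req.level}]: {req.text}")
```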

After the detailed requirements have been established, the total set of derived requirements should be validated against the initial stakeholder expectations at each level (e.g., system, subsystem).
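Part of this validation step is structural and can be automated. Every derived requirement should trace back up to a stakeholder expectation, and every stakeholder expectation should be covered by at least one derived requirement. The sketch below checks only that traceability, assuming a flat table of hypothetical requirement IDs with parent links. Whether the derived requirements actually capture the stakeholders' intent still needs engineering review.

```python
# Hypothetical flat requirements table: id -> (level, parent id or None).
REQUIREMENTS = {
    "STK-1": ("stakeholder", None),
    "STK-2": ("stakeholder", None),
    "SYS-1": ("system", "STK-1"),
    "SUB-1": ("subsystem", "SYS-1"),
    "SUB-2": ("subsystem", "SYS-9"),  # parent does not exist: broken trace
}


def trace_to_root(req_id, reqs):
    """Follow parent links upward; return the chain, or None if it breaks."""
    chain = [req_id]
    seen = {req_id}
    while True:
        level, parent = reqs[chain[-1]]
        if parent is None:
            # A valid chain must end at a stakeholder expectation.
            return chain if level == "stakeholder" else None
        if parent not in reqs or parent in seen:
            return None  # dangling or cyclic link
        chain.append(parent)
        seen.add(parent)


def validate(reqs):
    """Return (derived requirements with no trace to a stakeholder expectation,
    stakeholder expectations with no derived requirement)."""
    orphans = [r for r, (lvl, _) in reqs.items()
               if lvl != "stakeholder" and trace_to_root(r, reqs) is None]
    parents = {p for _, p in reqs.values() if p is not None}
    uncovered = [r for r, (lvl, _) in reqs.items()
                 if lvl == "stakeholder" and r not in parents]
    return orphans, uncovered


print(validate(REQUIREMENTS))  # (['SUB-2'], ['STK-2'])
```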

This approach works well for less complicated environments (narrow, controlled ODDs), where it is possible to enumerate all the situations the system under test will encounter. However, as the scope expands to broader autonomous driving use cases, the system must navigate endless corner cases in a highly variable environment. The number of requirements and scenarios that need to be considered then explodes (read more about this topic in this blog post).
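A short calculation shows why enumeration stops scaling. If every parameter of a scenario is sampled at a few discrete values, a full-factorial set of concrete scenarios grows as the product of those counts. The parameter names and value counts below are hypothetical, chosen only to contrast a narrow ODD with a broader one.

```python
from math import prod

# Hypothetical scenario parameters -> number of discrete values sampled for each.
narrow_odd = {"lead_speed": 3, "gap": 3, "weather": 1, "road_type": 1}
broad_odd = {"lead_speed": 10, "gap": 10, "weather": 5, "road_type": 8,
             "lighting": 3, "actor_type": 6, "ego_speed": 10}


def concrete_scenario_count(params):
    """Full-factorial count: one concrete scenario per combination of values."""
    return prod(params.values())


print(concrete_scenario_count(narrow_odd))  # 9
print(concrete_scenario_count(broad_odd))   # 720000
```

In this sketch, adding a few parameters and finer sampling takes the count from 9 to 720,000 concrete scenarios for a single behavior. This is before any new edge cases are added.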

So is there an alternative approach to defining requirements in broader ODDs?

How machine learning (ML)-driven companies approach requirements definition:

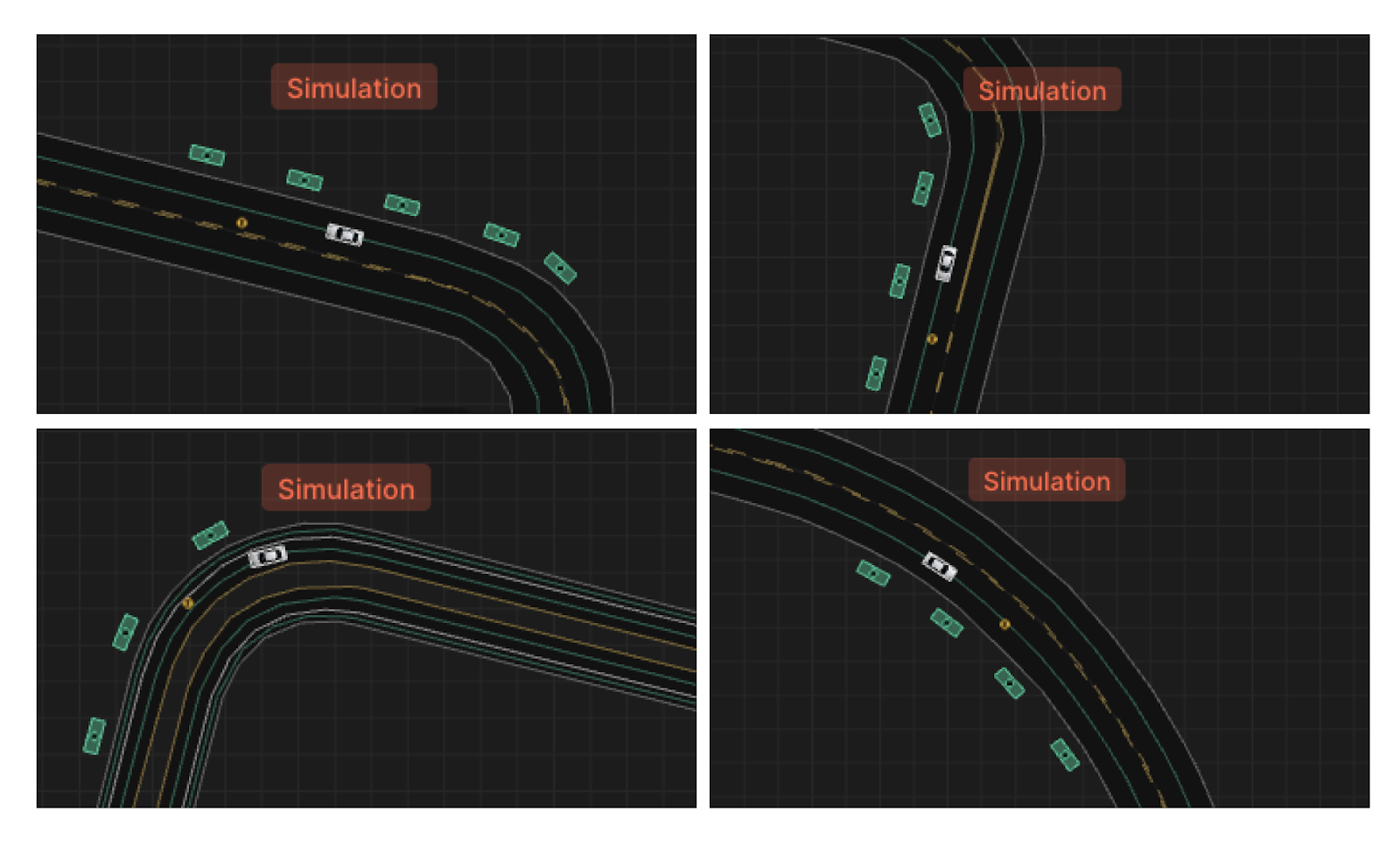

By contrast, ML-driven AV programs define many of their requirements through rigorous quantitative analysis of real-world drive data. The development team first draws up a list of measurements necessary for safe navigation in the ODD (e.g., an urban neighborhood), such as driving speed, distance to road curvature, deceleration, and following distance (figure 3). For each metric, the team studies thousands of scenarios to find appropriate thresholds for comfort and safety. For example, to understand safe following distance, the team may analyze scenarios across the ODD in which one vehicle trails another. From these, it derives a safe distance from the leading vehicle under each set of circumstances. These quantitative results inform performance requirements.
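As a minimal sketch of deriving a threshold from drive data, the code below turns car-following samples into time headways (gap divided by ego speed) and reads off a low percentile as a candidate lower bound. The samples are made up. Using time headway, a nearest-rank percentile, and the 10th percentile in particular are all assumptions for illustration. As the next paragraph points out, observed human behavior is not necessarily safe, so a value like this is a starting point for analysis, not a final requirement.

```python
import math

# Hypothetical car-following samples from drive logs:
# (gap to lead vehicle in metres, ego speed in m/s).
samples = [(30.0, 15.0), (24.0, 12.0), (45.0, 18.0), (20.0, 13.0),
           (36.0, 14.0), (28.0, 16.0), (50.0, 20.0), (18.0, 12.0)]


def time_headways(samples, min_speed=1.0):
    """Time headway = gap / speed; skip near-standstill samples where it blows up."""
    return [gap / v for gap, v in samples if v >= min_speed]


def percentile(values, p):
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


headways = time_headways(samples)
candidate_min_headway = percentile(headways, 10)
print(f"Candidate minimum time headway: {candidate_min_headway:.2f} s")  # 1.50 s
```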

The requirements definition process is iterated upon based on known issues experienced by the AV stack or by human drivers in the real world. For instance, human drivers may leave an average following distance of two seconds. Even so, a study of past drive data may find that this gap does not give the trailing vehicle sufficient time to react if the leading vehicle brakes suddenly. In that case, the requirement may be adjusted to call for a larger following distance.
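One way to check whether a following gap is sufficient is a worst-case stopping calculation. Assume both vehicles start at the same speed, and the lead vehicle brakes hard. The trailing vehicle brakes less hard and only after a reaction delay. The gap must cover the difference in their stopping distances. This is a simplified point-mass model, similar in spirit to worst-case formulations such as Responsibility-Sensitive Safety (RSS). The function names and the parameter values in the example (25 m/s, 1.5 s reaction time, 5 and 8 m/s²) are hypothetical.

```python
def min_safe_gap(v, reaction_time, follower_decel, lead_decel):
    """Smallest initial gap (m) that lets a follower at speed v (m/s) stop behind
    a lead vehicle at the same speed that brakes at lead_decel (m/s^2), when the
    follower brakes at follower_decel only after reaction_time (s).

    Requires follower_decel <= lead_decel: the gap then shrinks monotonically,
    so its minimum occurs once both vehicles have stopped.
    """
    if not 0 < follower_decel <= lead_decel:
        raise ValueError("expects 0 < follower_decel <= lead_decel")
    follower_travel = v * reaction_time + v ** 2 / (2 * follower_decel)
    lead_travel = v ** 2 / (2 * lead_decel)
    return follower_travel - lead_travel


def min_safe_headway(v, reaction_time, follower_decel, lead_decel):
    """The same requirement expressed as a time gap (s)."""
    return min_safe_gap(v, reaction_time, follower_decel, lead_decel) / v


gap = min_safe_gap(25.0, 1.5, 5.0, 8.0)
headway = min_safe_headway(25.0, 1.5, 5.0, 8.0)
print(f"{gap:.1f} m, {headway:.2f} s")  # 60.9 m, 2.44 s
```

Under these assumed values, the required time gap comes out above two seconds. This is the kind of finding that would prompt tightening a following-distance requirement derived from average human behavior.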

Once the data-based requirements are established, the development of planning algorithms and the creation of scenarios for testing in simulation happen in parallel.

Managing Requirements Throughout the Development Lifecycle:

Requirements change over the product development lifecycle as we discover new edge cases, encounter disengagements, and broaden the scope of the ODD. What are the best practices that development teams could take to manage an evolving list of requirements?

Requirements management generally involves tracking performance on requirements, assigning root causes and debugging failures, and adjusting requirement thresholds. When performing tests to verify whether the system or component design meets requirements, it is important to first translate requirements into semantically defined scenarios:

1. A requirement (e.g., following distance) should be linked to a functional scenario (e.g., a leading and a trailing vehicle in an urban neighborhood).
2. The functional scenario should then expand into logical scenarios with parameter variations (e.g., road conditions, weather variations, actor vehicle types).
3. With parameter distributions defined, concrete scenarios (specific testable instances) may be created for simulation testing.
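The requirement → functional → logical → concrete chain above can be sketched with a few data classes. Each concrete scenario keeps the ID of the requirement it came from, which is what later makes automatic traceability possible. The class names, the requirement ID, the parameter names and ranges, and the use of uniform sampling are all assumptions for illustration. They are not a specific scenario format such as OpenSCENARIO, nor any vendor's API.

```python
import random
from dataclasses import dataclass


@dataclass
class FunctionalScenario:
    requirement_id: str  # the requirement this scenario is meant to exercise
    description: str     # semantic, human-readable description


@dataclass
class LogicalScenario:
    functional: FunctionalScenario
    # parameter name -> (low, high) numeric range, or a list of categorical values
    parameters: dict


@dataclass
class ConcreteScenario:
    requirement_id: str  # kept so results can be traced back to the requirement
    values: dict         # one fixed value per parameter: a specific testable instance


def sample_concrete(logical, n, seed=0):
    """Draw n concrete scenarios from a logical scenario.

    Uniform sampling over ranges and categories is an assumption; real
    parameter distributions would usually be informed by drive data.
    """
    rng = random.Random(seed)
    scenarios = []
    for _ in range(n):
        values = {}
        for name, spec in logical.parameters.items():
            if isinstance(spec, tuple):
                values[name] = rng.uniform(*spec)
            else:
                values[name] = rng.choice(spec)
        scenarios.append(ConcreteScenario(logical.functional.requirement_id, values))
    return scenarios


following = FunctionalScenario(
    "REQ-FOLLOW-DIST", "Ego trails a lead vehicle in an urban neighborhood")
logical = LogicalScenario(following, {
    "lead_speed_mps": (5.0, 15.0),
    "initial_gap_m": (10.0, 40.0),
    "road_surface": ["dry", "wet"],
    "weather": ["clear", "rain", "fog"],
    "lead_actor_type": ["car", "van", "truck"],
})

for scenario in sample_concrete(logical, 3):
    print(scenario)
```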

To track the AV stack’s performance on individual requirements, tools may be used to keep a complete chain of traceability by automatically tracing simulation test results back to the original requirements. These tools also enable development teams to monitor progress by knowing which requirements have been verified and which need more evaluation.
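A minimal sketch of that roll-up follows. Each simulation result carries the requirement ID of the scenario it ran. The results are grouped per requirement, and each requirement is classified as verified, failing, untested, or needing more evaluation. The status names, the `min_runs` threshold, and the example IDs are hypothetical choices for illustration.

```python
from collections import defaultdict


def traceability_report(requirement_ids, results, min_runs=1):
    """Roll simulation results up to the requirements they were generated from.

    results: iterable of (requirement_id, passed) pairs, one per concrete
    scenario run. Returns a dict mapping each requirement ID to a status.
    """
    runs = defaultdict(list)
    for req_id, passed in results:
        runs[req_id].append(passed)

    report = {}
    for req_id in requirement_ids:
        outcomes = runs.get(req_id, [])
        if not outcomes:
            report[req_id] = "untested"
        elif not all(outcomes):
            report[req_id] = "failing"
        elif len(outcomes) < min_runs:
            report[req_id] = "needs more evaluation"
        else:
            report[req_id] = "verified"
    return report


requirements = ["REQ-FOLLOW-DIST", "REQ-MAX-DECEL", "REQ-COMFORT-JERK", "REQ-LANE-KEEP"]
results = ([("REQ-FOLLOW-DIST", True)] * 3
           + [("REQ-MAX-DECEL", True), ("REQ-MAX-DECEL", False)]
           + [("REQ-COMFORT-JERK", True)])
print(traceability_report(requirements, results, min_runs=3))
```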

When the AV stack fails on a requirement, development teams may debug the failure or, as discussed earlier, consider altering the requirement. Making requirements stricter often gives the vehicle an additional safeguard against error. However, loosening a requirement may be safer in some circumstances (e.g., exceeding the speed limit on a highway lane merge to match the flow of traffic). This example reinforces the importance of defining requirements in the context of the specific ODD.
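One way to keep such exceptions explicit is to scope requirement thresholds to ODD contexts instead of hard-coding a single global value. The sketch below does this for a speed requirement. The context names and margins are hypothetical and illustrative only, not recommended values.

```python
# Hypothetical ODD-scoped margins (km/h) for a "speed vs. posted limit" requirement.
# The numbers are illustrative assumptions, not recommended values.
SPEED_MARGIN_KPH = {
    "default": 0.0,         # stay at or below the posted limit
    "highway_merge": 10.0,  # bounded excess allowed to match traffic while merging
}


def speed_requirement_met(speed_kph, limit_kph, context):
    """Check speed against the limit using the margin for the given ODD context."""
    margin = SPEED_MARGIN_KPH.get(context, SPEED_MARGIN_KPH["default"])
    return speed_kph <= limit_kph + margin


print(speed_requirement_met(105.0, 100.0, "highway_merge"))  # True
print(speed_requirement_met(105.0, 100.0, "urban_street"))   # False
```

Unknown contexts fall back to the strict default, so any loosening has to be added deliberately for a named part of the ODD.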

Applied Intuition’s Approach:

Applied Intuition supports development teams in setting up workflows that can handle the scale required to automatically create scenarios from requirements. Using Applied Intuition's tools, development teams can programmatically generate functional, logical, and concrete scenarios from high-level functional requirements. They can also run large-scale simulations and programmatically analyze test results. To discuss requirements and traceability with Applied Intuition's engineering team, or to learn more, reach out via this link.
