Over the last decade, autonomous vehicle (AV) perception systems have moved from experimental, research-driven projects into production. In many cases, simulation forms the backbone of these state-of-the-art efforts and therefore must meet the same production standard. Production systems require multiple types of simulation to cover the range of events they must be trained on or tested against. In part 1 of this post, we’ll introduce several approaches to perception simulation and how they can be used together. In part 2, we’ll discuss the key requirements for these simulation methods.

Established Perception Simulation Approaches

Perception simulations come in various forms, and each approach has its own trade-offs. At Applied Intuition, we have built a hybrid solution in which real data is used to the extent that it is available, and validated synthetic simulation tools extend coverage into the long tail. We break perception simulation down into three domains, though hybrids between these methods are possible.

Resimulation of Drive Logs

Log replay, which we refer to as resimulation, is extremely useful for validating against real-world drive data and for testing unanticipated failures, or ‘unknown unknowns’. For example, a vehicle may encounter an unexpected object, such as a fallen mattress on a freeway, and change into an adjacent lane to avoid it safely but late. In this situation, resimulation lets engineers replay the drive against an updated stack and verify that the behavior has improved (e.g., the perception system makes better use of long-distance measurements, so the vehicle changes lanes earlier and more smoothly). Resimulation captures both common events and some long-tail events from the real world. While this approach is fairly fast to set up, the real challenge lies in the logistics and cost of scaling data collection. Its usefulness depends on the diversity of the datasets you own and is limited to the locations and experiences seen by the vehicle fleet. Variations such as location, environmental conditions, and object types cannot be modified. Finally, when the perception stack or sensors change significantly, existing drive data can become stale and no longer be usable for the production system.
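To make the replay idea concrete, here is a minimal sketch, assuming a drive log is simply a set of time-sorted, per-sensor message streams and the perception stack is a callable. It merges the streams by timestamp, feeds them to two versions of a toy stack, and compares when each first detects the mattress. `LogMessage`, `replay`, `make_stack`, and the fixed detection range are hypothetical illustrations, not Applied Intuition’s resimulation API.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional


@dataclass(order=True)
class LogMessage:
    timestamp: float                      # seconds since start of the drive
    sensor: str = field(compare=False)    # e.g. "lidar_top", "camera_front"
    payload: Dict = field(compare=False)  # decoded sensor data (toy: range to object)


Perceive = Callable[[LogMessage], Dict]


def replay(streams: Iterable[List[LogMessage]], perceive: Perceive) -> List[Dict]:
    """Merge time-sorted per-sensor streams by timestamp and feed them to the stack."""
    return [perceive(msg) for msg in heapq.merge(*streams)]


def make_stack(max_range_m: float) -> Perceive:
    """Hypothetical stand-in for a perception stack with a fixed detection range."""
    def perceive(msg: LogMessage) -> Dict:
        return {"t": msg.timestamp, "detected": msg.payload["range_m"] <= max_range_m}
    return perceive


def first_detection_time(outputs: List[Dict]) -> Optional[float]:
    """Timestamp of the first frame in which the object was detected, if any."""
    return next((o["t"] for o in outputs if o["detected"]), None)


# Toy log: the ego vehicle closes on a fallen mattress at 30 m/s.
lidar = [LogMessage(0.0, "lidar_top", {"range_m": 150.0}),
         LogMessage(2.0, "lidar_top", {"range_m": 90.0}),
         LogMessage(4.0, "lidar_top", {"range_m": 30.0})]
camera = [LogMessage(1.0, "camera_front", {"range_m": 120.0}),
          LogMessage(3.0, "camera_front", {"range_m": 60.0})]

old_t = first_detection_time(replay([lidar, camera], make_stack(max_range_m=60.0)))
new_t = first_detection_time(replay([lidar, camera], make_stack(max_range_m=120.0)))
print(f"old stack detects at t={old_t}s, updated stack at t={new_t}s")
```

Because the log itself never changes, any difference between the two runs comes from the stack, which is what makes replay useful for regression testing. The same property is also its limit: the mattress can only ever be where the fleet actually saw it.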

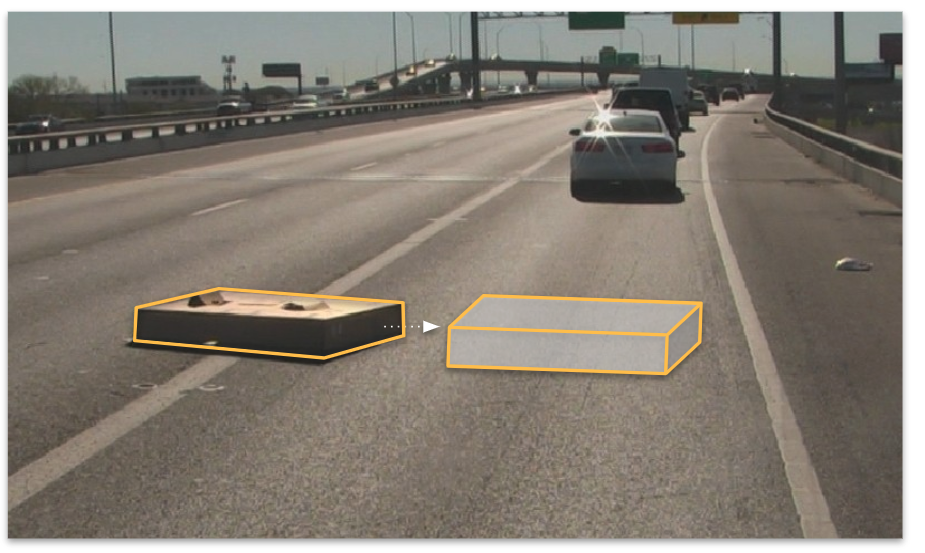

Partially Synthetic Simulation

Augmenting real drive logs with synthetic data is another way to test scenarios beyond those encountered in the real world. In effect, the logged data is streamed as in resimulation, but objects can be moved, added, or deleted. Most of the real scene’s sensor data is retained while still allowing a good variety of scene variations. This method is called “Actor Patching” in Applied Intuition’s resimulation tool and can be used to add or remove actors from drive data. Returning to the mattress example from the previous section, we can now modify the position of the mattress and test how the stack handles variations of the disengagement scenario (Figure 1). While object-level replacement of objects for motion planning is common, replacing an object in the sensor data itself is a relatively new method. There is, however, a limit to how far the scene can diverge from the original log before the data is no longer reliable.
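The object-level half of this idea can be sketched as edits to one frame’s list of actors. The sketch below is a simplified illustration, assuming actors are plain position records; `Actor`, `Patch`, `patch_frame`, and the `MAX_SHIFT_M` divergence budget are hypothetical names and values, not the Actor Patching interface. It deliberately does not cover the harder sensor-level step of re-rendering camera, lidar, or radar data for the patched scene.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Actor:
    actor_id: str
    kind: str
    x_m: float  # longitudinal position in the ego frame
    y_m: float  # lateral position in the ego frame (positive = left, assumed convention)


@dataclass
class Patch:
    add: List[Actor] = field(default_factory=list)
    remove: List[str] = field(default_factory=list)
    move: Dict[str, Tuple[float, float]] = field(default_factory=dict)  # id -> (dx, dy)


# Assumed divergence budget: larger moves would expose parts of the scene the real
# sensors never observed (e.g. background behind the object). Illustrative only.
MAX_SHIFT_M = 5.0


def patch_frame(actors: List[Actor], patch: Patch) -> List[Actor]:
    """Apply remove, then move, then add operations to one frame's actor list."""
    by_id = {a.actor_id: a for a in actors}
    for actor_id in patch.remove:
        by_id.pop(actor_id, None)
    for actor_id, (dx, dy) in patch.move.items():
        if (dx * dx + dy * dy) ** 0.5 > MAX_SHIFT_M:
            raise ValueError(f"move of {actor_id!r} exceeds the divergence budget")
        actor = by_id[actor_id]
        by_id[actor_id] = replace(actor, x_m=actor.x_m + dx, y_m=actor.y_m + dy)
    for actor in patch.add:
        by_id[actor.actor_id] = actor
    return sorted(by_id.values(), key=lambda a: a.actor_id)


frame = [Actor("mattress", "debris", 80.0, 0.0), Actor("car_1", "vehicle", 40.0, 3.5)]
patched = patch_frame(frame, Patch(
    add=[Actor("cone_1", "debris", 60.0, 1.0)],
    remove=["car_1"],
    move={"mattress": (0.0, -1.5)},
))
print([(a.actor_id, a.x_m, a.y_m) for a in patched])
```

Rejecting large moves outright is one simple way to encode the divergence limit; a real system would likely base that limit on what the sensors actually observed rather than on a single distance threshold.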

Correctly inserting an actor into, or removing one from, a drive log is not straightforward. Each of the typical autonomous sensors (camera, radar, and lidar) brings its own challenges. For a camera, inserting an actor with physically correct lighting is an ongoing area of research. For lidar and radar, complex interactions such as multipath cannot easily be simulated accurately, because the inserted actor has no simulated environment to interact with. As a result, reflections can be incorrect, and the lighting on the actor and the boundaries between real and synthetic data are difficult to keep free of artifacts. Complex occlusions are especially challenging and require dense depth information about the scene from the real data. We are exploring state-of-the-art deep learning methods, including Generative Adversarial Networks (GANs), to address these challenges, but these methods are not yet ready for production environments. We are committed to incorporating them as they become production-ready and when they make sense for our customers in terms of performance and scalability.
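The role of dense depth in handling occlusion can be illustrated with a per-pixel depth test (a z-buffer comparison) when compositing a rendered actor into a camera frame. This is a toy sketch, assuming a grayscale image and a dense per-pixel depth map for the real scene are already available; `composite` and the sample arrays are hypothetical, and the sketch ignores the lighting, shadow, reflection, and sensor-noise problems described above.

```python
from typing import List, Optional

Image = List[List[int]]                    # grayscale intensity per pixel (toy camera image)
Depth = List[List[float]]                  # metres per pixel, from the real scene
ActorDepth = List[List[Optional[float]]]   # None where the actor does not cover the pixel


def composite(real_img: Image, real_depth: Depth,
              actor_img: Image, actor_depth: ActorDepth) -> Image:
    """Per-pixel depth test: keep the actor only where it is closer than the real scene."""
    out: Image = []
    for r, row in enumerate(real_img):
        out_row = []
        for c, real_px in enumerate(row):
            d = actor_depth[r][c]
            visible = d is not None and d < real_depth[r][c]
            out_row.append(actor_img[r][c] if visible else real_px)
        out.append(out_row)
    return out


real_img = [[10, 10, 10], [10, 10, 10]]
real_depth = [[50.0, 50.0, 50.0], [50.0, 20.0, 50.0]]   # a pole 20 m away at pixel (1, 1)
actor_img = [[0, 200, 0], [200, 200, 200]]              # inserted mattress, 200 where present
actor_depth = [[None, 30.0, None], [30.0, 30.0, 30.0]]  # mattress rendered 30 m away

result = composite(real_img, real_depth, actor_img, actor_depth)
print(result)
```

The pole at pixel (1, 1) correctly hides part of the mattress only because its real depth is known; with sparse or missing depth, that pixel would be guessed, which is where compositing artifacts appear.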

Fully Synthetic Simulation

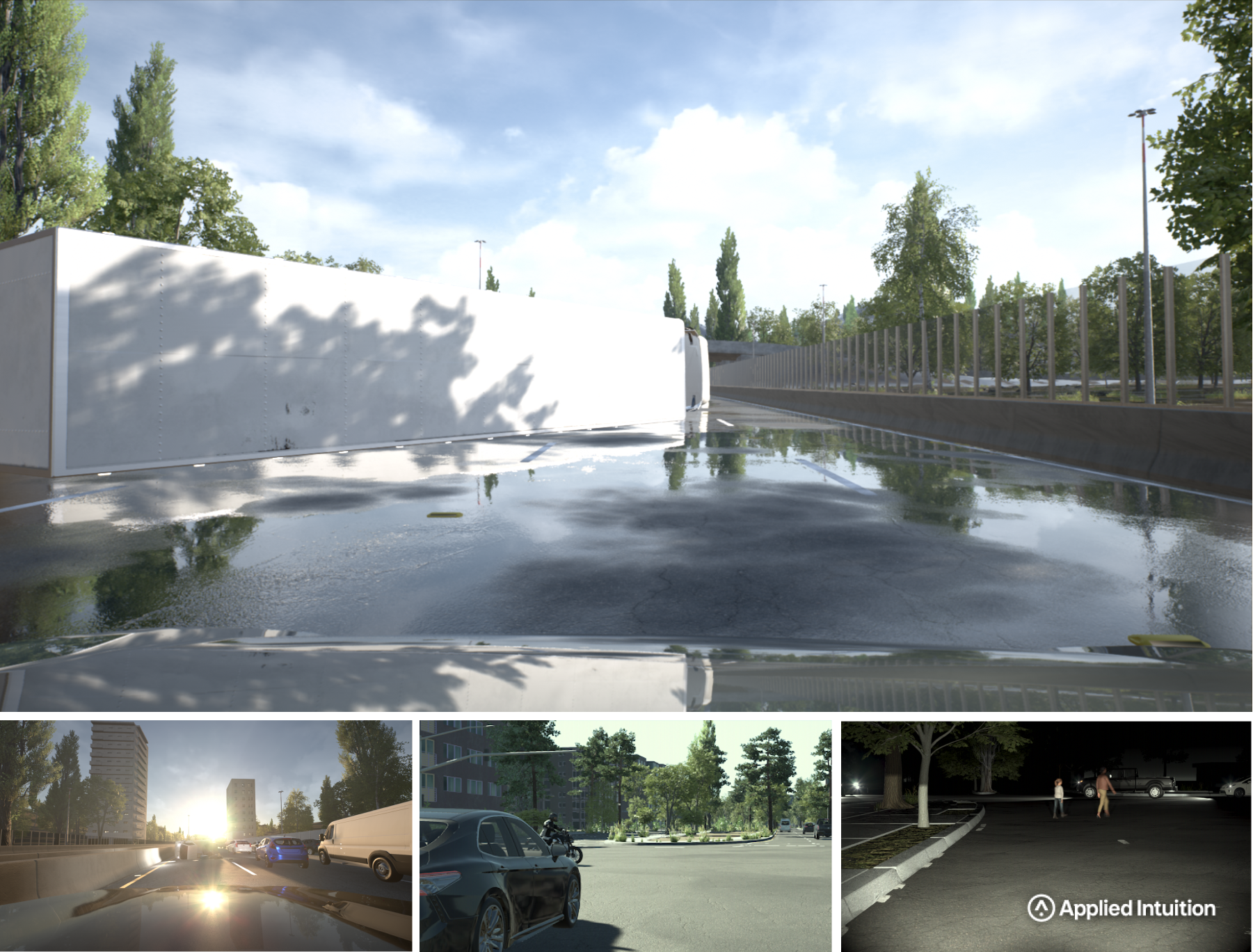

In contrast to resimulation, fully synthetic simulation can be used to create virtually any long-tail event. We can simulate the same fallen-mattress scenario in rainy conditions at night, with thousands of different objects placed in the middle of the road. The long tail can be extended and tested almost without limit. While this seems like an obvious solution, there is a domain gap between synthetic and real environments and sensors. We will discuss this point further in part 2 of this blog series.
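Much of that variety comes from combining a handful of independent scene parameters, so the number of variants grows multiplicatively. The sketch below enumerates such a grid with Python’s `itertools.product`; the parameter names and values (`WEATHER`, `TIME_OF_DAY`, `OBJECTS`, `LATERAL_OFFSET_M`) are hypothetical and much smaller than a real sweep.

```python
import itertools
from typing import Dict, Iterator

# Hypothetical scenario parameters for the fallen-object example.
WEATHER = ["clear", "rain", "fog"]
TIME_OF_DAY = ["day", "night"]
OBJECTS = ["mattress", "tire", "ladder", "cardboard_box"]
LATERAL_OFFSET_M = [-1.0, 0.0, 1.0]  # object offset from the lane center


def scenario_variants() -> Iterator[Dict]:
    """Yield every combination of the parameters above as one scenario description."""
    for weather, tod, obj, offset in itertools.product(
            WEATHER, TIME_OF_DAY, OBJECTS, LATERAL_OFFSET_M):
        yield {"weather": weather, "time_of_day": tod,
               "object": obj, "lateral_offset_m": offset}


variants = list(scenario_variants())
rainy_night_mattress = [v for v in variants
                        if v["weather"] == "rain"
                        and v["time_of_day"] == "night"
                        and v["object"] == "mattress"]
print(len(variants), len(rainy_night_mattress))
```

Four small lists already produce 3 × 2 × 4 × 3 = 72 scenarios; adding object catalogs, speeds, and road layouts is how such grids reach thousands of variants.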

Physically accurate synthetic data can be used both to train and to test perception algorithms. Traditional approaches, such as generating large sets of base scenarios or recreating synthetic scenes from drive log data, can serve as a starting point. Environments can be created quickly from many forms of map data, and assets can be generated procedurally to maximize variation. From there, techniques like Structured Domain Randomization or full Domain Randomization can make algorithms more robust by adding variance that may go beyond what is typical in the real world (e.g., a wider range of car paint colors).
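The difference between the two randomization styles can be sketched with a toy car-placement sampler. This is a simplified illustration, assuming a straight three-lane road; the function names, lane geometry, and jitter values are hypothetical, and neither function is Applied Intuition’s implementation or a full reproduction of published Structured Domain Randomization. Both draw car paint uniformly over RGB, which is deliberately broader than real-world paint colors; they differ only in whether placement respects scene structure.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CarSample:
    x_m: float                        # distance along the road
    y_m: float                        # lateral position
    heading_deg: float                # 0 = aligned with the direction of travel
    paint_rgb: Tuple[int, int, int]


LANE_CENTERS_M = [-3.5, 0.0, 3.5]     # assumed straight three-lane road, 3.5 m lanes
ROAD_LENGTH_M = 100.0


def random_paint(rng: random.Random) -> Tuple[int, int, int]:
    """Uniform RGB: intentionally wider than the real distribution of car paints."""
    return (rng.randint(0, 255), rng.randint(0, 255), rng.randint(0, 255))


def full_dr(rng: random.Random, n: int) -> List[CarSample]:
    """Full domain randomization: ignore scene structure (any position, any heading)."""
    return [CarSample(rng.uniform(0.0, ROAD_LENGTH_M), rng.uniform(-10.0, 10.0),
                      rng.uniform(0.0, 360.0), random_paint(rng))
            for _ in range(n)]


def structured_dr(rng: random.Random, n: int,
                  lateral_jitter_m: float = 0.3,
                  heading_jitter_deg: float = 5.0) -> List[CarSample]:
    """Structured randomization: cars sit in lanes, roughly aligned with traffic."""
    cars = []
    for _ in range(n):
        lane = rng.choice(LANE_CENTERS_M)
        cars.append(CarSample(
            rng.uniform(0.0, ROAD_LENGTH_M),
            lane + rng.uniform(-lateral_jitter_m, lateral_jitter_m),
            rng.uniform(-heading_jitter_deg, heading_jitter_deg),
            random_paint(rng)))
    return cars


scene_full = full_dr(random.Random(0), 20)
scene_structured = structured_dr(random.Random(0), 20)
print(scene_structured[0])
```

Seeding each `random.Random` instance makes every generated scene reproducible, which matters when a failing sample has to be regenerated and debugged.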

Alternatively, development may focus on handling specific rare events, which are often tied to particular failure modes. Fully synthetic simulation can fill gaps in test plans where real data is not available. Examples might include an up-close motorcycle, a tipped-over vehicle on the road, or vehicle types that are rarely encountered (Figure 2). Data that is too dangerous or too rare to collect on the road can be created synthetically.

Depending on the implementation, fully synthetic simulation can require enormous effort. The capabilities described above only became possible with today’s online resources and rendering technologies. Real-time ray tracing, combined with validated materials and procedural geometry generation, allows relatively small teams to create physically accurate and highly varied simulations. Automakers around the world are proving these synthetic simulations useful and necessary on a daily basis, using them to find exactly where, and why, an algorithm could fail.

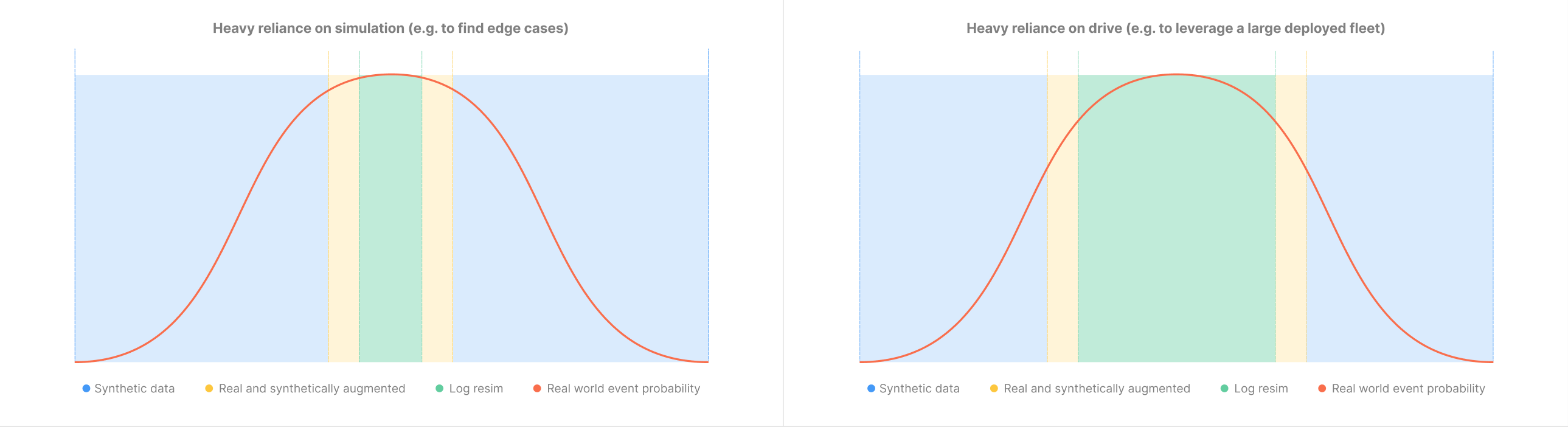

Multivariate Approach Required for Production Autonomy

By combining these methodologies, we can cover the full range of scenarios and variations required to test an autonomous perception system. How the tools are combined depends on the AV system and, in particular, on its access to drive logs. Figure 3 shows two example systems: the one on the left relies more heavily on synthetic simulation (e.g., to focus on edge cases), and the one on the right focuses more on resimulation of drives (e.g., to leverage a large deployed fleet).

Conclusion

Perception algorithms and simulation have moved out of research and into production. We’ve described several of the approaches being used at the cutting edge of development, as well as the core requirements for simulations used with these perception algorithms. This field is constantly evolving, and while new methods that hybridize the approaches described here may emerge over time, the vast majority of the effort in building these capabilities lies in productionization.

Applied Intuition’s Approach

Applied Intuition’s perception simulation products support all of the approaches above and are well positioned to support future developments in this space. We have spent years on research and development of these approaches, so please reach out to Applied Intuition’s engineering team if you are interested in using these forms of simulation.
