Developing safety-critical perception systems requires an enormous amount of data to cover the range of edge cases encountered in the real world. Labeling and curating datasets from real-world driving data is a common approach that the industry has used to train autonomous vehicle (AV) algorithms. Tesla uses this approach extensively, leveraging its fleet and adapting the labels of interest online, as Tesla’s Andrej Karpathy discussed at CVPR 2020. This “variation and control” is important because engineers constantly adapt the ontology and labeling instructions as self-driving vehicles encounter new scenarios that need to be addressed.

There are various limitations to this drive-data approach, however: it is difficult to scale, data collection is costly, and labeling information accurately takes significant effort. In this blog, the Applied Intuition team discusses a complementary approach that uses synthetically created and labeled data to train and develop AV algorithms faster and more cost-effectively while keeping the focus on safety.

Current Approach to Data Labeling and Its Challenges

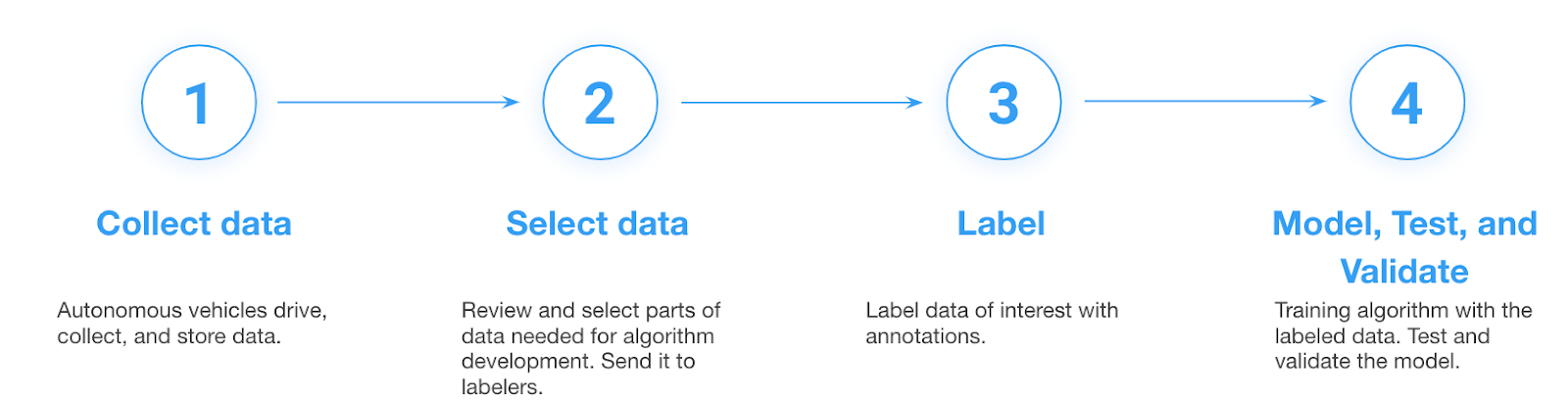

A typical approach to creating labeled data is shown in Figure 2. It is a labor-intensive process in which test drivers drive a vehicle loaded with sensors in either manual or automated mode. Software running on the car records the raw sensor data along with the software outputs across perception, planning, and controls. Dedicated development vehicles may be needed because many production vehicles lack sensors accurate enough to capture this data. Once the data is ingested, selecting what to label and analyze is itself laborious: engineers must carefully extract specific events of interest and send them to labeling companies for annotation, while keeping the dataset as small as possible to control labeling costs. Sometimes this includes finding specific edge cases (e.g., a plastic bag flying across a freeway) in the drive logs. Each change to the vehicle's sensor configuration may also require re-collecting and relabeling large amounts of data.
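The selection step above can be sketched as a small greedy filter. This is an illustrative assumption, not a description of any particular team's tooling: the `LogEvent` schema, the tag names, and the rarest-tag-first policy are all hypothetical, and the budget is counted in frames on the assumption that labeling cost grows with the number of frames sent out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEvent:
    """One candidate snippet mined from a drive log (hypothetical schema)."""
    log_id: str
    timestamp_s: float
    tags: frozenset
    num_frames: int


def select_for_labeling(events, tags_of_interest, frame_budget):
    """Greedily pick tagged events, rarest tags first, without exceeding a frame budget."""
    # Count how often each tag appears so long-tail (rare) events are prioritized.
    tag_counts = {}
    for ev in events:
        for tag in ev.tags:
            tag_counts[tag] = tag_counts.get(tag, 0) + 1

    # Keep only events carrying at least one tag we care about.
    candidates = [ev for ev in events if ev.tags & tags_of_interest]

    def priority(ev):
        # Rarest matching tag first; shorter snippets break ties (cheaper to label).
        rarest = min(tag_counts[t] for t in ev.tags & tags_of_interest)
        return (rarest, ev.num_frames)

    selected, used = [], 0
    for ev in sorted(candidates, key=priority):
        if used + ev.num_frames <= frame_budget:
            selected.append(ev)
            used += ev.num_frames
    return selected, used


events = [
    LogEvent("log_a", 12.0, frozenset({"highway"}), 300),
    LogEvent("log_a", 80.5, frozenset({"highway", "plastic_bag"}), 40),
    LogEvent("log_b", 5.0, frozenset({"stop_sign"}), 60),
    LogEvent("log_c", 33.0, frozenset({"stop_sign", "occluded"}), 50),
]
selected, used = select_for_labeling(
    events, frozenset({"plastic_bag", "occluded", "stop_sign"}), frame_budget=100
)
print([e.log_id for e in selected], used)  # ['log_a', 'log_c'] 90
```

Note that the filter can only find what is already tagged: an event type nobody thought to tag never reaches the candidate list.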

While labeling may be the only sure way to prepare the clean data needed for AV algorithm training, the biggest downside of this approach is the investment required to reach sufficient scale. Test drivers may need to drive hundreds or thousands of miles before they encounter an edge case. A company like Tesla has over one million Autopilot-equipped production vehicles worldwide collecting long-tail datasets on its behalf, such as stop signs in different languages, placements, and degrees of relevance to the ego vehicle. Most OEMs do not have enough production vehicles with the capabilities to provide such datasets. Even with a large volume of drive data, there is no guarantee that the required data is in the dataset. In that case, dedicated driving campaigns are needed to collect it, adding cost and delay.
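A back-of-the-envelope model shows why rare events demand so many miles. Assuming, purely for illustration, that a given edge case occurs as a Poisson process at a hypothetical rate of one per 1,000 miles, the sketch below computes the miles needed to see it at least once with 95% confidence and the expected miles to collect 100 examples:

```python
import math


def miles_for_confidence(events_per_mile, confidence):
    """Miles needed so P(at least one event) >= confidence, assuming a Poisson event process."""
    # P(no event in m miles) = exp(-rate * m)  =>  m = -ln(1 - confidence) / rate
    return -math.log(1.0 - confidence) / events_per_mile


def expected_miles_for_examples(events_per_mile, num_examples):
    """Expected miles to observe num_examples events (mean of the Gamma waiting time)."""
    return num_examples / events_per_mile


# Hypothetical rate: one qualifying edge case every 1,000 miles.
rate = 1.0 / 1000.0
print(round(miles_for_confidence(rate, 0.95)))        # 2996
print(round(expected_miles_for_examples(rate, 100)))  # 100000
```

Both quantities scale inversely with the rate, so an edge case ten times rarer needs ten times the mileage.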

Another consideration is the availability of conditions. At the time of writing, the western United States is seeing extreme weather conditions that tint the sky orange, or even red in certain cases (Figure 3). If one does not have vehicles in the area, it may take years for these conditions to occur again, and the resulting underrepresentation of this type of data can bias the dataset.

Further, AV developers are always looking for new snippets of data, and querying the data efficiently requires significant infrastructure. Much of this querying assumes that a specific tag or annotation already exists, which may not be the case if that annotation was never considered. Finally, labeling is costly: many approaches are manual and require humans in the loop, which creates many chances for inaccuracy from labeling errors or incomplete information (e.g., a car partially hidden behind another car).

Using Synthetic Data and Its Benefits

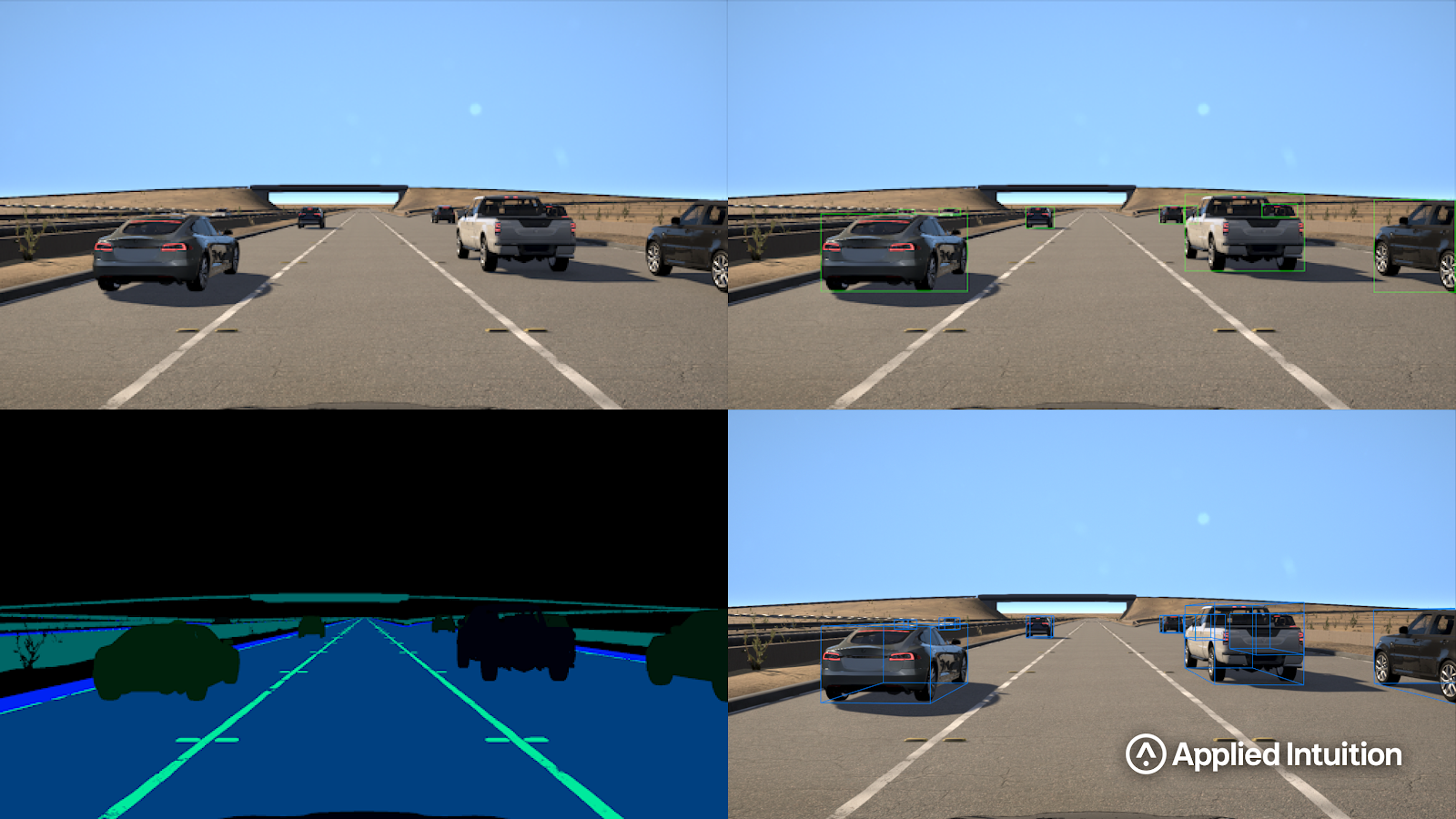

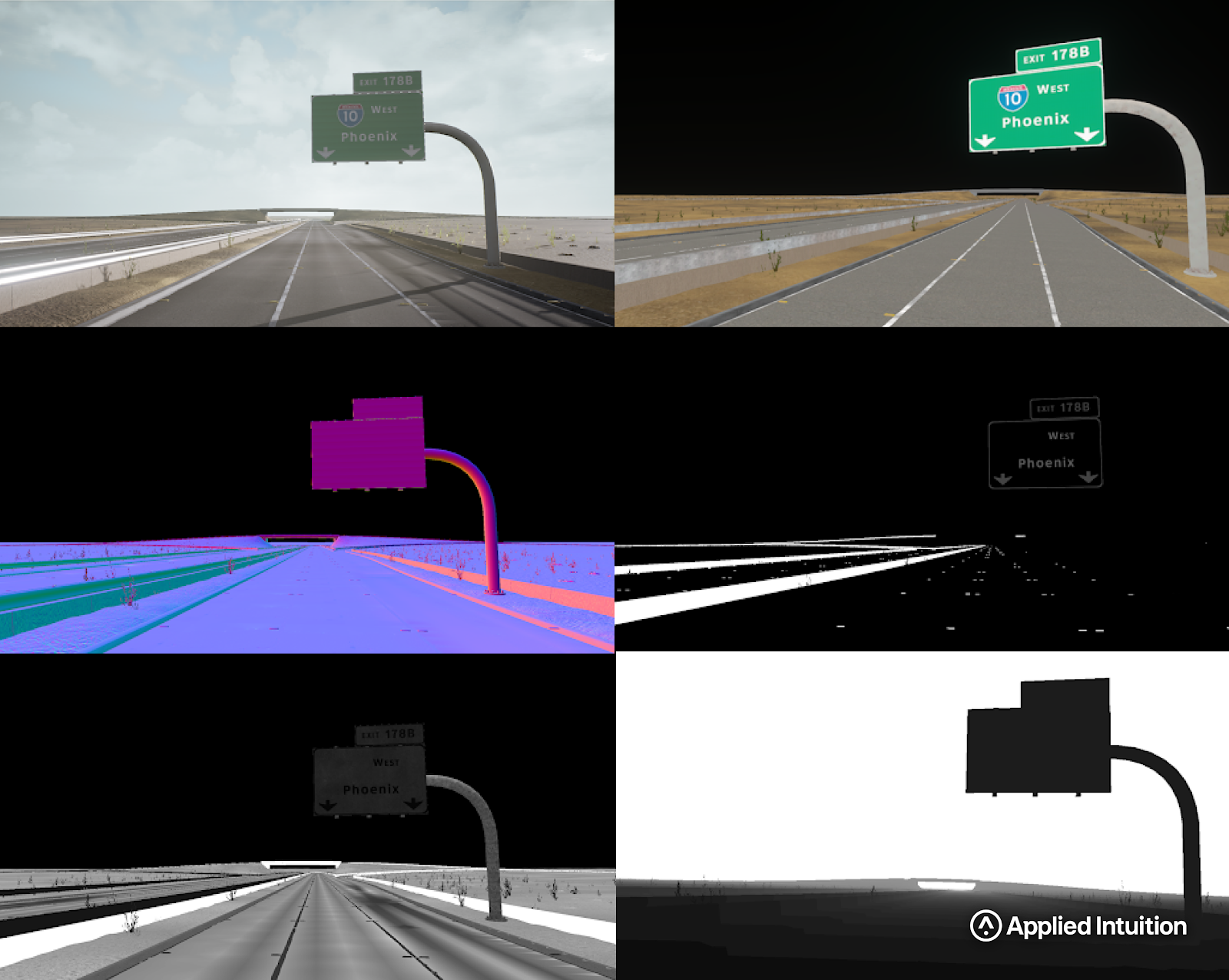

Synthetic data provides an alternative approach that is more scalable and accurate. Because synthetic data is created from simulations, the ground truth information (e.g., semantic labels of vehicles or the text on traffic signs) is known precisely. Simulations can also provide precise information such as the albedo, depth, retro-reflection, and roughness of every object in a scene (Figure 4). Pixel-perfect object masks and semantic and instance labels are available as well. As a result, any of these annotations can be created automatically, and no manual labeling of sensor data is needed. While custom annotations might require a small amount of software to extract the right data from the simulation, this is a one-time fixed cost that enables new label classes.
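Because the simulator knows which object produced every pixel, annotations that humans would otherwise draw by hand can be derived in software. As a minimal sketch, assuming the simulator exports a per-pixel instance-ID image (a hypothetical format, with 0 as background), tight 2D bounding boxes fall out directly:

```python
import numpy as np


def boxes_from_instance_mask(instance_mask, background_id=0):
    """Derive tight 2D boxes {instance_id: (x_min, y_min, x_max, y_max)} from an instance-ID image."""
    boxes = {}
    for inst_id in np.unique(instance_mask):
        if inst_id == background_id:
            continue  # pixels with no object
        rows, cols = np.nonzero(instance_mask == inst_id)
        boxes[int(inst_id)] = (int(cols.min()), int(rows.min()), int(cols.max()), int(rows.max()))
    return boxes


# Toy 4x6 instance image: object 7 covers a 2x3 block, object 9 is a single pixel.
mask = np.zeros((4, 6), dtype=np.int32)
mask[1:3, 1:4] = 7
mask[0, 5] = 9
print(boxes_from_instance_mask(mask))  # {7: (1, 1, 3, 2), 9: (5, 0, 5, 0)}
```

Boxes computed this way cover only the visible pixels; because the simulator also knows each object's full geometry, occlusion-complete (amodal) boxes can be produced as well.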

Another key benefit of synthetic data labeling is that one can create many variations without driving in the real world or counting on luck. Synthetic data also lets you focus on specific objects of interest. With the right procedural setup, one could simulate millions of example traffic signs in a few hours, including examples under different lighting conditions, object locations, occlusions, and degradations (rust, oil, graffiti). In this way, synthetic data can be used in a complementary fashion to real data: long-tail events identified in the real data can serve as a starting point for creating thousands of variations around that event.
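One way to express such a procedural setup is to start from a parameterized scene, for example one reconstructed from a long-tail event found in real data, and sample perturbations from a seeded random generator so the dataset is reproducible. The `SignScene` fields, parameter ranges, and the 30% occlusion rate below are hypothetical placeholders, not values from any real pipeline:

```python
import random
from dataclasses import dataclass, replace

DEGRADATIONS = ("none", "rust", "oil", "graffiti")


@dataclass(frozen=True)
class SignScene:
    """Hypothetical parameters for one synthetic traffic-sign sample."""
    sign_type: str
    sun_elevation_deg: float
    distance_m: float
    occlusion_fraction: float
    degradation: str


def vary_around(base, n, seed):
    """Generate n variations of a base scene (e.g., a real long-tail event), reproducibly."""
    rng = random.Random(seed)  # fixed seed -> same dataset every run
    variations = []
    for _ in range(n):
        occluded = rng.random() < 0.3  # assumed: ~30% of samples partially occluded
        variations.append(replace(
            base,
            sun_elevation_deg=rng.uniform(-5.0, 80.0),                    # just below horizon to high sun
            distance_m=max(2.0, base.distance_m + rng.gauss(0.0, 10.0)),  # jitter around the original
            occlusion_fraction=rng.uniform(0.1, 0.7) if occluded else 0.0,
            degradation=rng.choice(DEGRADATIONS),
        ))
    return variations


base = SignScene("stop", sun_elevation_deg=40.0, distance_m=25.0,
                 occlusion_fraction=0.0, degradation="none")
samples = vary_around(base, n=10_000, seed=42)
```

Each sampled `SignScene` would then be handed to the renderer; that step is tool-specific and omitted here.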

Variation is also important geographically. A test vehicle would have to drive on foreign streets to encounter road signs with country-specific modifiers, or drive for hundreds of hours to find a scene where a sign is half hidden by a school bus; with synthetic data, these conditions can be created instantly (Figure 5). With the wide variety of scenarios that can be produced synthetically, it is possible to test algorithms specifically against edge cases (Figure 6). A related post describes how Kodiak Robotics, an autonomous trucking company, uses synthetic simulation to train algorithms and to comprehensively test the Kodiak Driver’s ability to handle edge cases.

Another key use case is obtaining ground truth for data that is neither available from sensors nor easy to add manually. A common example is robustly extracting depth from a monocular or stereoscopic camera system. Real-world camera data does not include per-pixel depth, and that depth cannot be computed accurately or annotated by hand.
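Dense per-pixel ground truth is what makes it possible to score a depth network on every pixel rather than only on sparse lidar hits. As a minimal sketch, the absolute relative error (a commonly used depth metric) can be computed against a simulator-rendered depth map; the 80 m evaluation cap and the use of a very large value for sky pixels are assumptions for this example:

```python
import numpy as np


def abs_rel_error(pred_depth, gt_depth, max_depth_m=80.0):
    """Mean absolute relative depth error over pixels with valid ground truth."""
    # Ignore pixels with no depth (<= 0) or beyond the evaluation range (e.g., sky at the far plane).
    valid = (gt_depth > 0.0) & (gt_depth <= max_depth_m)
    err = np.abs(pred_depth[valid] - gt_depth[valid]) / gt_depth[valid]
    return float(err.mean())


gt = np.array([[10.0, 20.0], [40.0, 1000.0]])  # 1000 m stands in for a sky pixel
pred = np.array([[11.0, 18.0], [40.0, 5.0]])
print(round(abs_rel_error(pred, gt), 4))  # 0.0667
```

The same dense depth maps can also serve directly as training targets for a monocular depth network.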

Requirements for Synthetic Data to Be Useful

Sensor data

For synthetic data labeling to be useful for training and testing AV algorithms, both the synthetic sensor data and the annotations must meet certain criteria. As discussed in the earlier blog post about sensor simulation, large amounts of synthetic sensor data for AV development must be created inexpensively and rapidly (in days). The synthetic sensors should also obey the fundamental physics inherent to each sensor type. The level of fidelity to model is always a key consideration: there is a trade-off between the allowed domain gap (how differently perception algorithms see synthetic and real data) and the speed of generating the data. The domain gap can also vary with the sensor being simulated, as well as with the asset types and environmental conditions being considered. The key is to quantify the domain gap and use that information to decide how the synthetic data can be used. As an example, Figure 7 shows how a synthetic lidar sensor reacts to wet road conditions, with visible effects on the lidar returns both at ground level and from the spray thrown up by vehicles.
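One common way to put a number on the domain gap, offered here as an illustrative choice rather than Applied Intuition's method, is to embed real and synthetic samples with the same perception network and compare the feature distributions, in the spirit of the Fréchet Inception Distance. The sketch below assumes diagonal covariances to keep it short, and uses random arrays as stand-ins for real embeddings:

```python
import numpy as np


def diagonal_frechet_distance(real_feats, synth_feats):
    """Fréchet distance between Gaussians fitted to two (N x D) feature sets, diagonal covariances assumed."""
    mu_r, mu_s = real_feats.mean(axis=0), synth_feats.mean(axis=0)
    var_r, var_s = real_feats.var(axis=0), synth_feats.var(axis=0)
    mean_term = np.sum((mu_r - mu_s) ** 2)
    # With diagonal covariances, Tr(S_r + S_s - 2 (S_r S_s)^{1/2}) reduces to a per-dimension sum.
    cov_term = np.sum(var_r + var_s - 2.0 * np.sqrt(var_r * var_s))
    return float(mean_term + cov_term)


rng = np.random.default_rng(0)
real = rng.normal(0.0, 1.0, size=(2000, 8))         # stand-in for real-data embeddings
synth_close = rng.normal(0.1, 1.0, size=(2000, 8))  # small shift
synth_far = rng.normal(1.0, 2.0, size=(2000, 8))    # larger shift and spread
print(diagonal_frechet_distance(real, synth_close) < diagonal_frechet_distance(real, synth_far))  # True
```

A distance like this is only a proxy; comparing a task metric (for example, detection accuracy on real data for models trained with and without synthetic data) measures the gap more directly.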

Environments

The next consideration for synthetic data is the variation of environments and of the materials used within them. Environments should be generated quickly from real maps and data, as shown in Figure 8, and building up the world this rapidly relies on procedural generation. Being able to simulate any geographic region in the world is another major advantage of synthetic data over real data. While different locations are easily created in simulation, synthetic data can become repetitive if not set up correctly. Understanding the impact of repetition on perception algorithms, and giving synthetic data the same kinds of variation as the real world, are current focus areas. Variation must be considered both at the macro level, such as how a synthetic road surface changes over a 1 km stretch, and at the micro level of each material used in the environment.
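To keep a 1 km stretch of road from looking like one tile repeated, a surface property can be driven by a smooth, seeded noise function along the road's length. The sketch below uses 1D value noise with smoothstep interpolation; the single "wear" parameter and the 50 m knot spacing are assumptions for illustration, and a production pipeline would likely layer several noise octaves and parameters:

```python
import math
import random


def road_wear_profile(length_m, seed, knot_spacing_m=50.0):
    """Return wear(s) in [0, 1] that varies smoothly along a road of length_m meters."""
    rng = random.Random(seed)  # one seed per road segment: varied but reproducible
    n_knots = max(2, math.ceil(length_m / knot_spacing_m) + 1)
    knots = [rng.random() for _ in range(n_knots)]  # random wear level at each knot

    def wear_at(s_m):
        s = min(max(s_m, 0.0), length_m) / knot_spacing_m  # clamp, then convert to knot units
        i = min(int(s), n_knots - 2)
        t = s - i
        t = t * t * (3.0 - 2.0 * t)  # smoothstep: no visible kinks at the knots
        return knots[i] * (1.0 - t) + knots[i + 1] * t

    return wear_at


wear = road_wear_profile(1000.0, seed=7)
profile = [wear(s) for s in range(0, 1001, 10)]  # wear sampled every 10 m
```

The wear value would then drive material choices such as crack density or how strongly a worn-asphalt texture is blended in.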

The importance of physically based rendering (PBR) materials was discussed in the previous blog post, but many of the textures that make up these materials are typically small scans of a real surface. Creating blends and variations of these materials to add variation to the synthetic data is critical for both training and testing.
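A minimal sketch of such blending, assuming each material is stored as a dictionary of texture maps (`albedo`, `roughness`, and `normal` are hypothetical names) at the same resolution, with normals unpacked to unit vectors, and that a blend mask (for example from procedural noise or a painted grime map) is supplied:

```python
import numpy as np


def blend_materials(mat_a, mat_b, mask):
    """Blend two PBR texture sets (dicts of H x W x C arrays) using a per-pixel mask in [0, 1]."""
    m = np.clip(mask, 0.0, 1.0)[..., None]  # add a channel axis so it broadcasts over C
    out = {}
    for name in mat_a:
        blended = mat_a[name] * (1.0 - m) + mat_b[name] * m
        if name == "normal":
            # Linearly blended normals are no longer unit length; renormalize them.
            blended = blended / np.linalg.norm(blended, axis=-1, keepdims=True)
        out[name] = blended
    return out


h, w = 2, 2
fresh_asphalt = {"albedo": np.full((h, w, 3), 0.05), "roughness": np.full((h, w, 1), 0.6),
                 "normal": np.tile([0.0, 0.0, 1.0], (h, w, 1))}
worn_asphalt = {"albedo": np.full((h, w, 3), 0.25), "roughness": np.full((h, w, 1), 0.9),
                "normal": np.tile([0.6, 0.0, 0.8], (h, w, 1))}
mixed = blend_materials(fresh_asphalt, worn_asphalt, np.full((h, w), 0.5))
```

Simple linear blending is the least sophisticated option; height-based blending, for example, can give more natural transitions between materials.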

Annotations

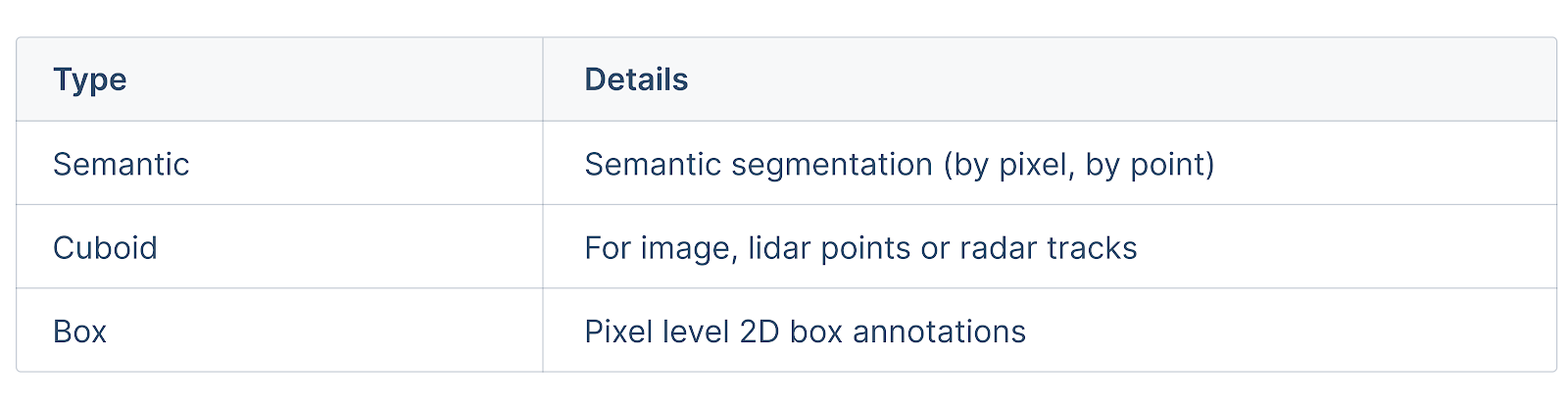

The required data annotations depend on both the use case and the perception algorithms. Table 1 shows the annotation types typically available from real-world data collection.

For synthetic data, in addition to the same annotations available for real data, significantly more ground truth information can be conveyed in the collected data. The ground truth is also always pixel- or point-perfect. Finally, both the sensor data and the annotations can be expressed in any frame of reference (world, ego, sensor, etc.).
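Because every annotation is exact in the simulator, re-expressing it in another frame is just a rigid transform. A minimal sketch, assuming 4x4 homogeneous transforms and yaw-only rotations for brevity (real sensor extrinsics generally include roll and pitch too), with hypothetical mounting and pose values:

```python
import numpy as np


def make_transform(yaw_rad, translation_xyz):
    """4x4 homogeneous transform from a yaw rotation (about +z) and a translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation_xyz
    return T


def transform_points(T_dst_from_src, points_src):
    """Map (N x 3) points from the source frame into the destination frame."""
    homog = np.hstack([points_src, np.ones((len(points_src), 1))])
    return (homog @ T_dst_from_src.T)[:, :3]


# Hypothetical setup: lidar 1.5 m ahead of and 2.0 m above the ego origin, no rotation;
# ego at (100, 50, 0) in the world, facing +y (yaw = 90 degrees).
T_ego_from_lidar = make_transform(0.0, [1.5, 0.0, 2.0])
T_world_from_ego = make_transform(np.pi / 2, [100.0, 50.0, 0.0])
T_world_from_lidar = T_world_from_ego @ T_ego_from_lidar  # chain: lidar -> ego -> world

pt_lidar = np.array([[10.0, 0.0, -2.0]])  # a ground point 10 m ahead of the lidar
pt_world = transform_points(T_world_from_lidar, pt_lidar)  # approximately (100.0, 61.5, 0.0)
```

Naming each matrix `T_dst_from_src` makes chained products easy to check: adjacent frame names must match.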

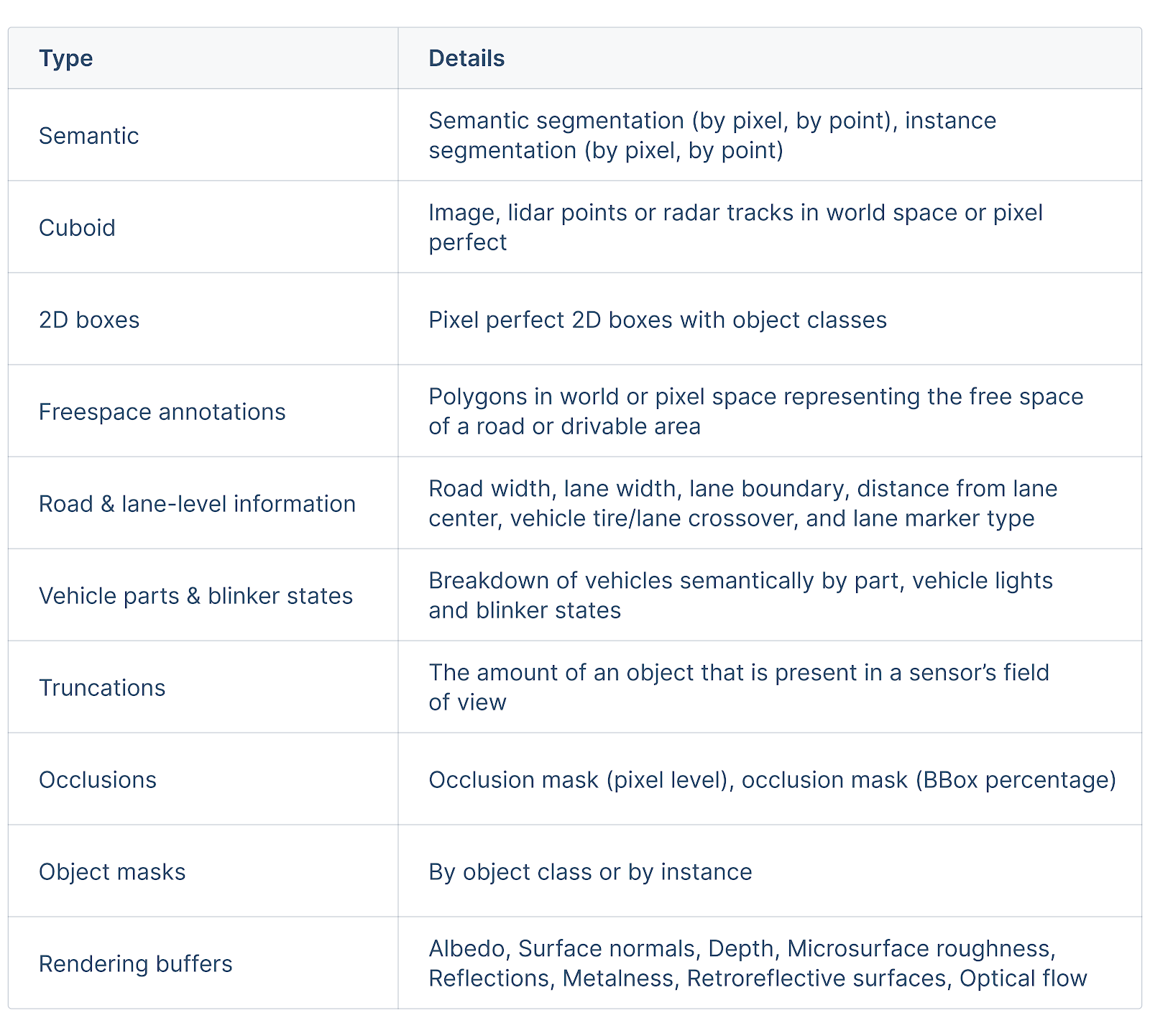

Table 2 shows the standard annotation types available for data generated from simulation. In addition, many custom formats and data types are supported.

Using these additional forms of ground truth greatly speeds up algorithm development. The scale of the available data, its quality, and the amount of information stored in the ground truth create a faster feedback cycle for making engineering choices.

Applied Intuition’s Approach to Synthetic Data Labeling

The Applied Intuition team has developed a robust simulation engine capable of programmatically creating high-fidelity synthetic data with deterministic results. Our extensive library of synthetic datasets supports industry-specific use cases and comes with a wide range of off-the-shelf annotations. If you’re interested in discussing annotations using synthetic data, get in touch with our engineers!
