As autonomous vehicle development advances, the need for powerful yet accessible software tools has become increasingly apparent. Applied Intuition’s platform, designed for leading automotive manufacturers, originally offered a robust scenario editor built around a code-centric interface. Power users and developers design and simulate dynamic scenarios in a WebGL-rendered 3D scene, either by writing code in a code editor or by interacting with the scene directly.

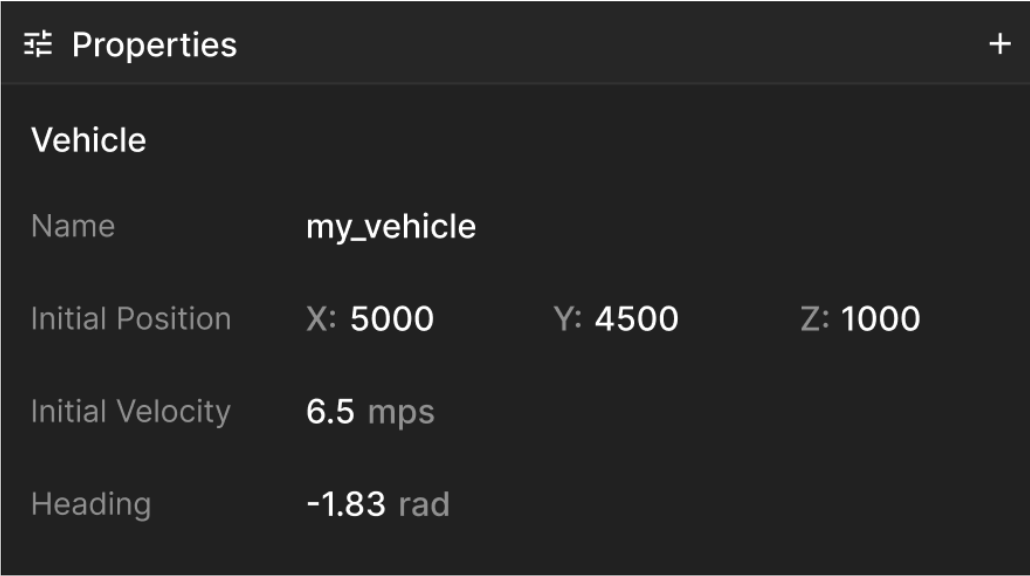

This scenario editor was powerful and enabled a variety of use cases for our customers. However, with a rapidly expanding and diverse customer base, it became clear that a more intuitive, no-code workflow was essential. The challenge lay in creating a graphical user interface (GUI) that could match the precision and feature richness of the original code-based tools while remaining adaptable to dynamic data models.

This post explores the journey of transforming a developer-focused scenario editor into a dynamic, heuristics-based, auto-generated GUI. It details the architectural decisions, technical challenges, and key lessons learned in building a scalable and maintainable no-code interface. The experience offers insights relevant to product managers, engineers, and designers seeking to balance functionality with accessibility in complex software systems.

The Problem: Bridging Code and GUI Workflows

We started with just a text editor, offering customizability and flexibility to power users. However, we recognized the need to make this tool accessible to a broader and less technical audience. The initial GUI workflows lacked the precision and feature richness of the code-based workflows. On top of the poor user experience, the legacy GUIs were hard to maintain and did not add up to a cohesive experience.

To address this, we created a GUI workflow that offers all the capabilities of the code-based workflow without sacrificing usability or performance.

Beyond the primary requirements, we also needed to account for several additional considerations:

- Allow ordering/customization of data: It should be possible to specify whether a customization or ordering applies only to a particular set of nodes or to all nodes of a particular type.

- Advanced support around components: A common use case is converting a text field to a dropdown, with menu items fetched by an API call. These custom components should keep all the capabilities and functionality of the default components while improving the usability of particular portions of the UI.

- Editable data validation parameters: One of the biggest pieces of early feedback was the lack of granularity in validation messaging in the new GUI. We knew that we had to embed validation at each level so that users knew exactly where to edit to fix errors in their scenario. This involved changing existing backend validators to attach a JSONPath to each validation message.

- Usage metrics: To inform further development of our editor, we wanted to enable easy tracking of which parts of the GUI were being used. With this, we could use a JSONPath to identify which edit operations were most common in the UI.
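The per-level validation requirement in the list above can be sketched roughly as follows. This is a minimal illustration, not the platform's actual API: the `ValidationMessage` shape, the path format, and the helper names are all assumptions.

```typescript
// Hypothetical shape of a validation message returned by a backend validator.
interface ValidationMessage {
  path: string; // assumed JSONPath-like location, e.g. "$.agents[0].route.speed"
  severity: "error" | "warning";
  message: string;
}

// Group messages by the exact path they were reported on,
// so each field component can look up its own messages.
function indexByPath(messages: ValidationMessage[]): Map<string, ValidationMessage[]> {
  const index = new Map<string, ValidationMessage[]>();
  for (const m of messages) {
    const bucket = index.get(m.path) ?? [];
    bucket.push(m);
    index.set(m.path, bucket);
  }
  return index;
}

// True when `path` is `ancestor` itself or is nested below it.
function isAtOrBelow(path: string, ancestor: string): boolean {
  if (path === ancestor) return true;
  return path.startsWith(ancestor + ".") || path.startsWith(ancestor + "[");
}

// Count messages at or below a node, e.g. to show a badge on a collapsed section.
function countUnder(messages: ValidationMessage[], nodePath: string): number {
  return messages.filter((m) => isAtOrBelow(m.path, nodePath)).length;
}

// Example data (invented for illustration).
const messages: ValidationMessage[] = [
  { path: "$.agents[0].route.speed", severity: "error", message: "speed must be >= 0" },
  { path: "$.agents[1].name", severity: "warning", message: "name is empty" },
];
const byPath = indexByPath(messages);
const agent0Count = countUnder(messages, "$.agents[0]");
```

The same path strings could also serve as keys for usage metrics, since each edit operation already knows the path of the node it touches.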

The Solution: Auto-Generated GUI from Data Schemas

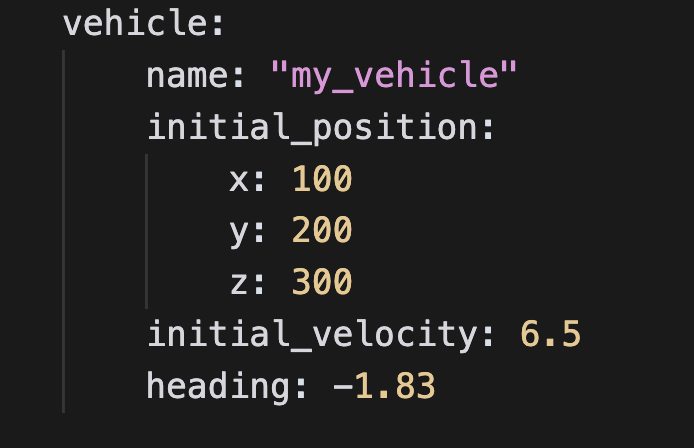

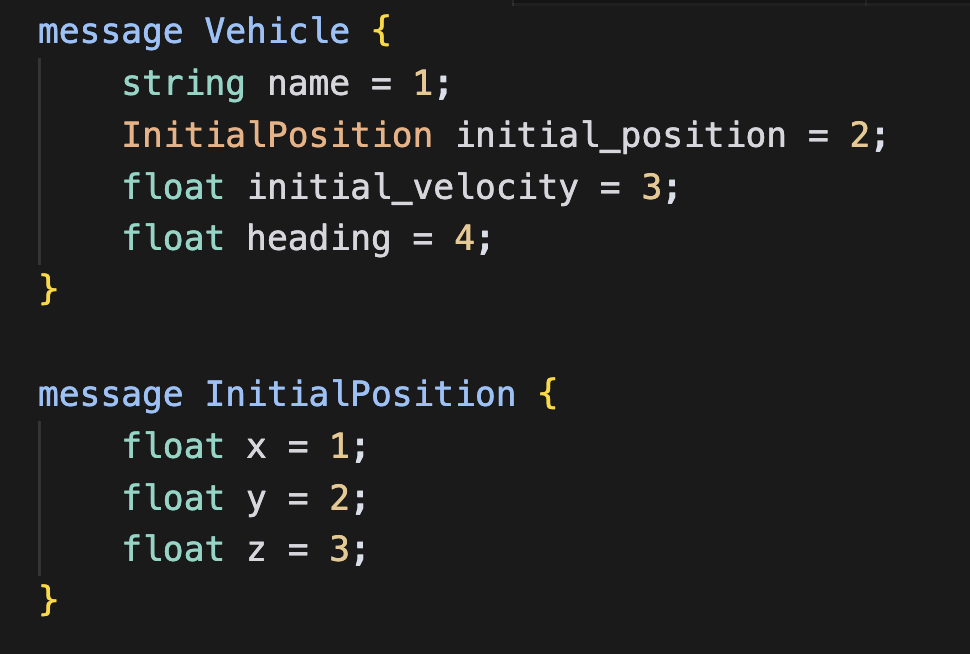

To achieve this, we built a powerful library that automatically generates a GUI from Google Protocol Buffer (protobuf) messages. This system creates specific UI components tailored to each field type within the protobuf descriptor, enabling users to interact with and edit data seamlessly through the GUI. The underlying architecture is built with a clear separation of concerns, composed in multiple layers, each addressing specific responsibilities.

Accessing protobufs from the frontend

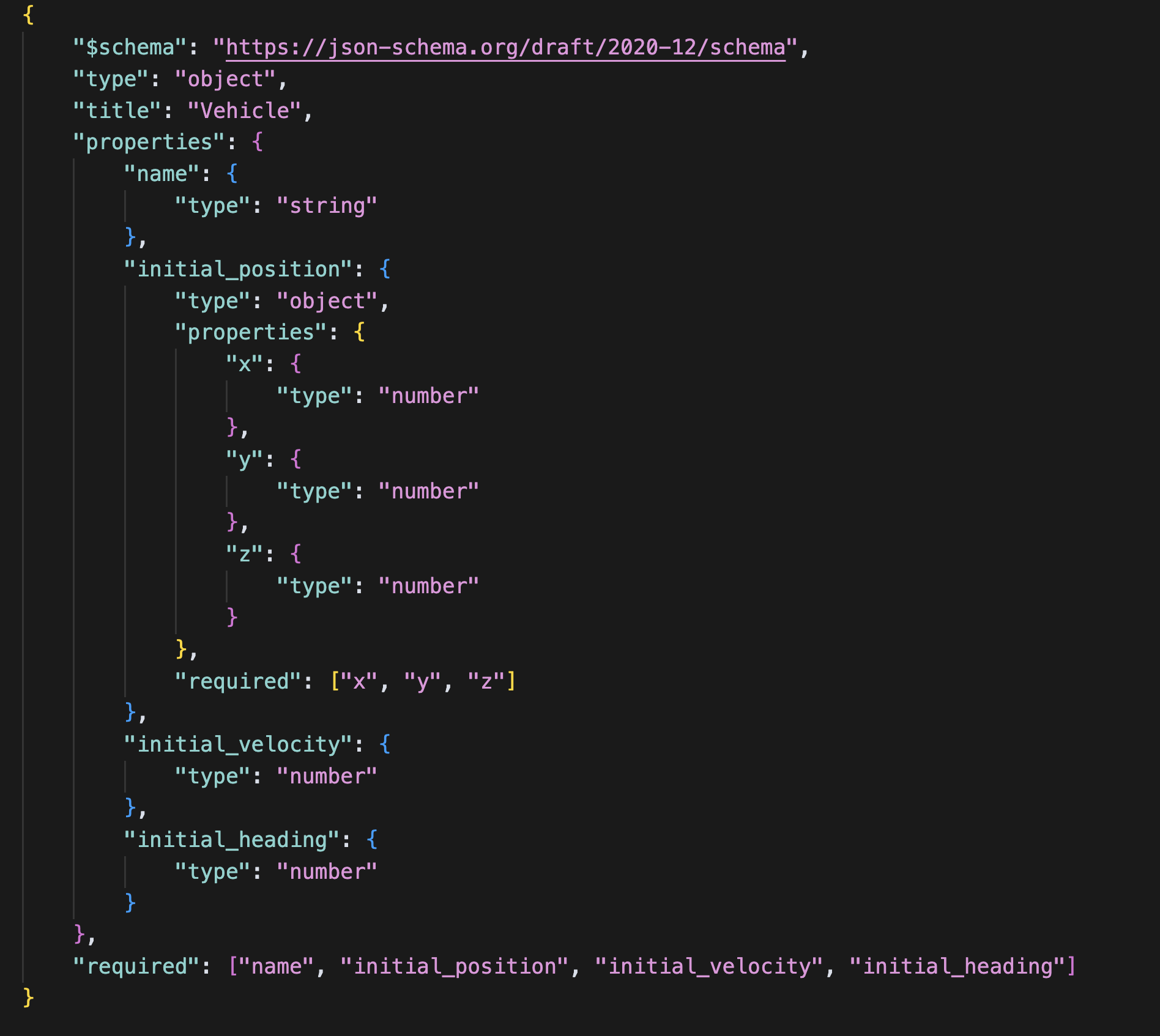

Building this protobuf-to-GUI generation library required accessing the protobuf descriptor directly on the frontend, which is not possible by default. To load protobuf descriptors into the frontend, we used a Go library (protoc-gen-jsonschema) to generate JSONSchema representations of our protocol buffers at build time. JSONSchemas are just JSON files that adhere to the JSONSchema specification, so they can be imported in the frontend directly.

The elegant piece here is that whenever one of our engineers changes a protobuf message definition, for example by adding a field, our build system automatically picks up the change and updates the JSONSchema for the frontend to consume. This keeps the protobuf descriptor as the single source of truth.
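To make the build-time handoff more concrete, here is a rough sketch of what a generated schema might look like once it reaches the frontend. The `Agent` and `Waypoint` messages, the import path in the comment, and the exact schema layout are assumptions; real generator output depends on the plugin version and options.

```typescript
// Hypothetical protobuf message, shown only for reference:
//
//   message Agent {
//     string name = 1;
//     double speed_mps = 2;
//     repeated Waypoint waypoints = 3;
//   }

// Minimal subset of JSON Schema used in this sketch.
interface JsonSchema {
  type?: string;
  description?: string;
  properties?: Record<string, JsonSchema>;
  items?: JsonSchema;
  $ref?: string;
  definitions?: Record<string, JsonSchema>;
}

// In a real build this object would be imported from the generated file,
// e.g. `import agentSchema from "./generated/Agent.json"` (path is assumed).
const agentSchema: JsonSchema = {
  $ref: "#/definitions/Agent",
  definitions: {
    Agent: {
      type: "object",
      properties: {
        name: { type: "string" },
        speed_mps: { type: "number" },
        waypoints: { type: "array", items: { $ref: "#/definitions/Waypoint" } },
      },
    },
    Waypoint: {
      type: "object",
      properties: { x: { type: "number" }, y: { type: "number" } },
    },
  },
};

// Resolve a local "#/definitions/Name" reference against the root schema.
function resolveRef(root: JsonSchema, ref: string): JsonSchema {
  const name = ref.replace("#/definitions/", "");
  const target = root.definitions?.[name];
  if (!target) throw new Error(`Unknown definition: ${name}`);
  return target;
}

const agent = resolveRef(agentSchema, agentSchema.$ref!);
const fieldNames = Object.keys(agent.properties ?? {});
```

Adding a field to the hypothetical `Agent` message would add a matching entry under `properties` on the next build, with no hand-written frontend change.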

Layer 1: The protobuf descriptor graph

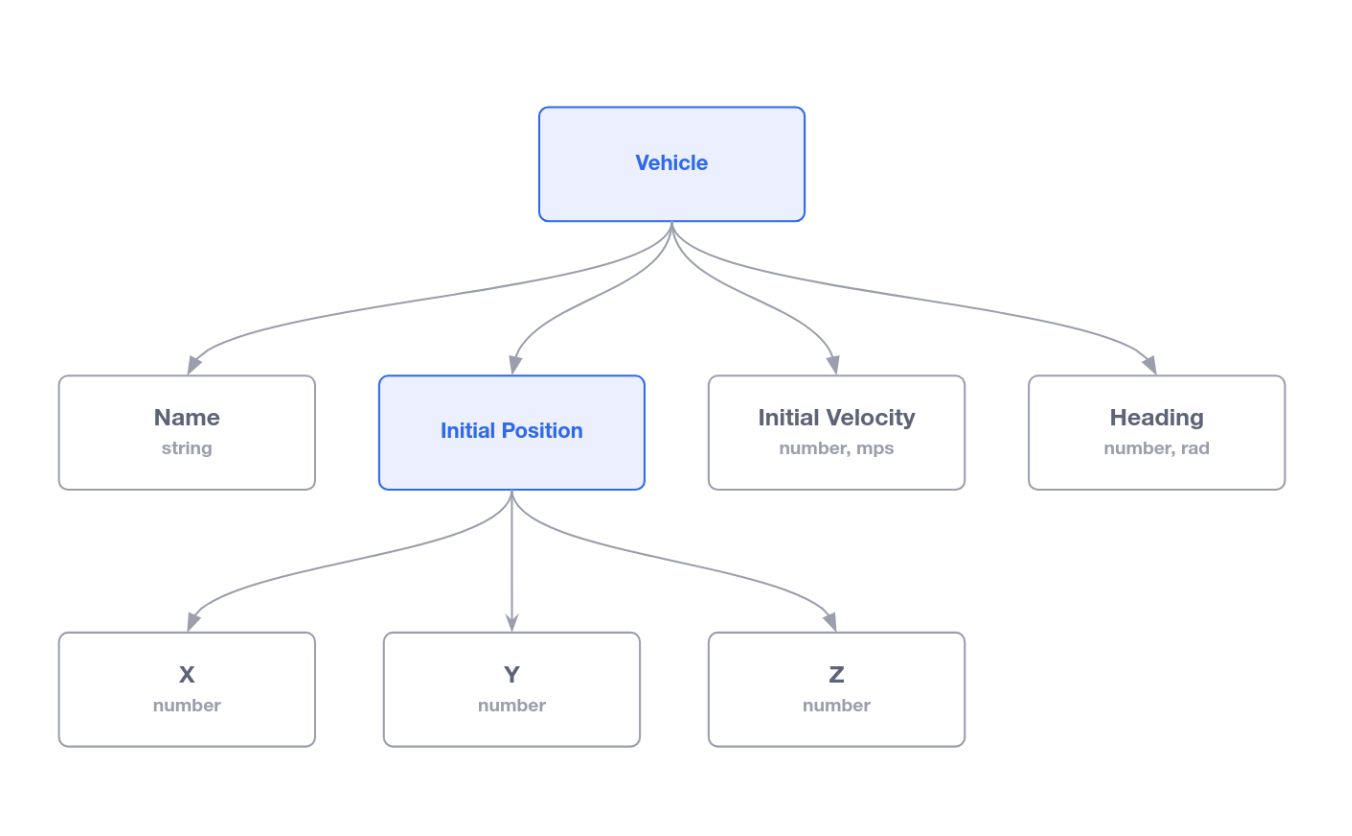

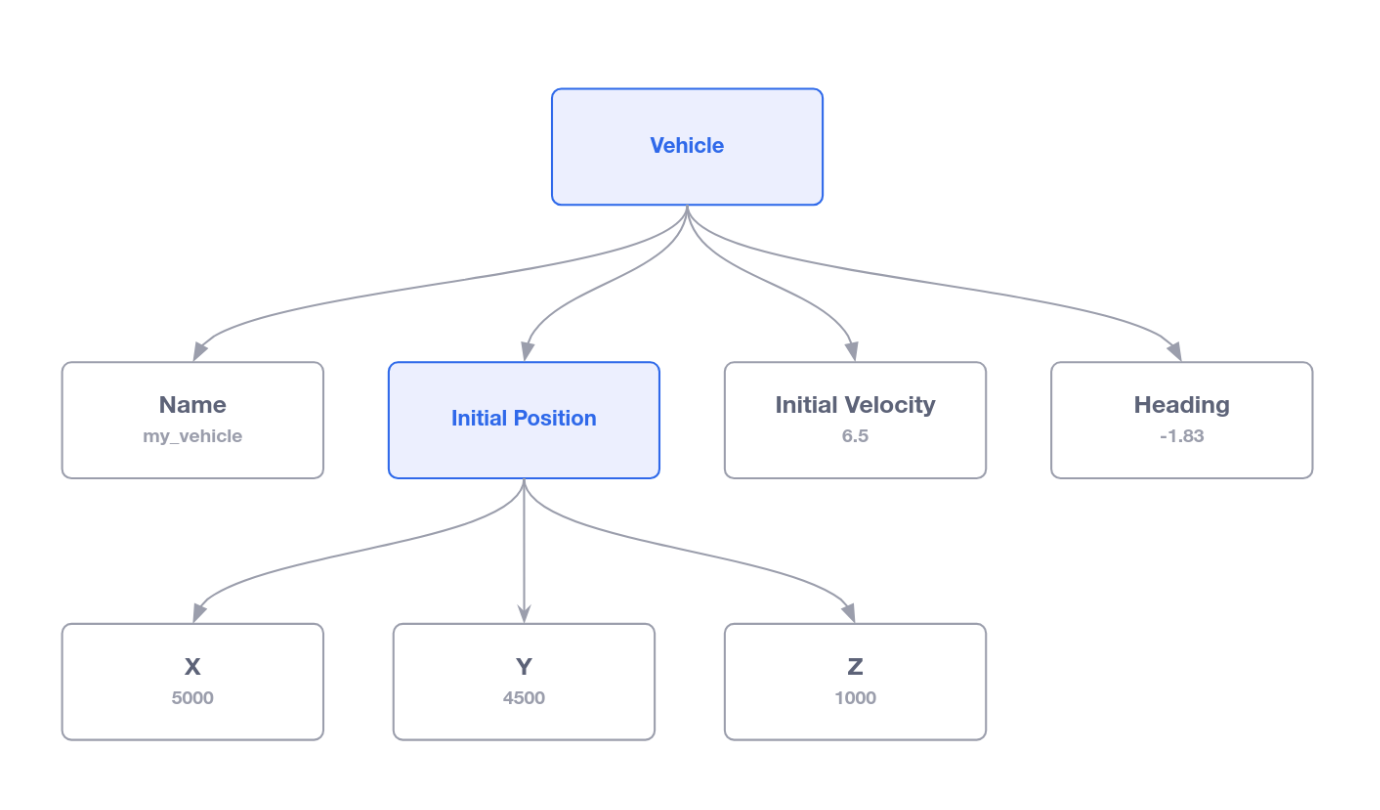

Once the JSONSchema exists, we load it into the frontend and construct a graph representation of the protobuf descriptor: the protobuf descriptor graph.

At the lowest level, we developed the protobuf descriptor graph, which indexes all protobuf messages and provides information about the protobuf message definitions such as field names and types, selection options for oneof fields, and additional metadata. This layer acts as the foundation, offering a reliable and consistent way to interpret protobuf schemas. By keeping this layer focused purely on schema interpretation, we ensure that it remains stable and unaffected by changes in higher-level application logic or UI requirements.
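A descriptor graph along these lines might be built as in the sketch below. The class and interface names are hypothetical, the schema subset is simplified, and oneof handling is omitted for brevity. Messages are indexed by name and message-typed fields store only the target name, so a message that refers to itself does not need special handling.

```typescript
// Assumed minimal JSON Schema subset; real generator output may differ.
interface JsonSchema {
  type?: string;
  enum?: string[];
  properties?: Record<string, JsonSchema>;
  items?: JsonSchema;
  $ref?: string;
}

interface FieldDescriptor {
  name: string;
  kind: "scalar" | "enum" | "message";
  repeated: boolean;
  typeName: string; // scalar type name, "enum", or the referenced message name
  enumValues?: string[];
}

interface MessageDescriptor {
  name: string;
  fields: Map<string, FieldDescriptor>;
}

// Translate one schema property into a field descriptor.
function toField(name: string, schema: JsonSchema): FieldDescriptor {
  const repeated = schema.type === "array";
  const inner: JsonSchema = repeated ? (schema.items ?? {}) : schema;
  if (inner.$ref) {
    const typeName = inner.$ref.replace("#/definitions/", "");
    return { name, kind: "message", repeated, typeName };
  }
  if (inner.enum) {
    return { name, kind: "enum", repeated, typeName: "enum", enumValues: inner.enum };
  }
  return { name, kind: "scalar", repeated, typeName: inner.type ?? "unknown" };
}

class DescriptorGraph {
  private messages = new Map<string, MessageDescriptor>();

  constructor(definitions: Record<string, JsonSchema>) {
    for (const name of Object.keys(definitions)) {
      const fields = new Map<string, FieldDescriptor>();
      const props = definitions[name].properties ?? {};
      for (const fieldName of Object.keys(props)) {
        fields.set(fieldName, toField(fieldName, props[fieldName]));
      }
      this.messages.set(name, { name, fields });
    }
  }

  getMessage(name: string): MessageDescriptor | undefined {
    return this.messages.get(name);
  }

  // Follow a message-typed field to the descriptor it points at.
  childOf(messageName: string, fieldName: string): MessageDescriptor | undefined {
    const field = this.messages.get(messageName)?.fields.get(fieldName);
    return field && field.kind === "message" ? this.messages.get(field.typeName) : undefined;
  }
}

// A recursive message: a Behavior can contain child Behaviors (invented example).
const definitions: Record<string, JsonSchema> = {
  Behavior: {
    type: "object",
    properties: {
      name: { type: "string" },
      mode: { type: "string", enum: ["SEQUENTIAL", "PARALLEL"] },
      children: { type: "array", items: { $ref: "#/definitions/Behavior" } },
    },
  },
};
const graph = new DescriptorGraph(definitions);
```

Because the graph holds only schema information and no values, it can be built once and shared by every consumer.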

The descriptor graph’s modularity lets us apply it to use cases beyond the scenario editor. For example, we enhanced autocompletion in the code editor by introspecting the field names available on each protobuf message and the options available on enum and oneof fields. We could also check whether a field is deprecated based on its protobuf field options and hide it from autocomplete, reducing the chance that deprecated simulation features get used. Finally, we added hover documentation for each field by reading the description generated from protobuf comments.
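As a rough illustration of the autocomplete use case, the sketch below filters field metadata by prefix and hides deprecated fields. The `FieldInfo` and `Completion` shapes are assumptions and are not tied to any particular code editor's completion API.

```typescript
// Hypothetical per-field metadata as it might be read from the descriptor graph.
interface FieldInfo {
  name: string;
  description?: string; // taken from protobuf comments
  deprecated?: boolean; // taken from protobuf field options
}

// Hypothetical completion item handed to the code editor.
interface Completion {
  label: string;
  documentation: string;
}

// Suggest non-deprecated fields whose name starts with the typed prefix.
function completeFields(fields: FieldInfo[], prefix: string): Completion[] {
  return fields
    .filter((f) => !f.deprecated && f.name.startsWith(prefix))
    .sort((a, b) => a.name.localeCompare(b.name))
    .map((f) => ({ label: f.name, documentation: f.description ?? "" }));
}

// Example metadata (invented for illustration).
const agentFields: FieldInfo[] = [
  { name: "start_time", description: "When the agent becomes active." },
  { name: "spawn_point", description: "Use initial_pose instead.", deprecated: true },
  { name: "speed_mps", description: "Initial speed in meters per second." },
  { name: "name" },
];

const suggestions = completeFields(agentFields, "s");
```

The `documentation` string is the same text a hover tooltip could show, so both features can share one lookup.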

Layer 2: The protobuf message tree

Building on this, the Protobuf Message Tree layer stores the current values for each field or message node. It acts as the core data model, reflecting the user’s interactions and maintaining synchronization between the UI and the underlying data. By isolating state management in this layer, we’ve made the system more maintainable, as changes to how data is stored or processed don’t impact other parts of the architecture.

Similar to the protobuf descriptor graph, the modular nature of the layer allows us to create many different UI components independent of this autogeneration library that still reference the same underlying data model. This means that the only thing needed to generate the components with filled-in values is to update the data model, the Protobuf Message Tree, with the intended state.
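One way to picture this layer is a small store that holds values by path and notifies subscribers on change. The sketch below is a simplification under stated assumptions: `MessageTree` here is a hypothetical class, paths are plain arrays of keys and indices, and values are mutated in place.

```typescript
type PathSegment = string | number;
type Listener = (path: PathSegment[]) => void;
type TreeNode = Record<string, unknown>;

class MessageTree {
  private root: TreeNode;
  private listeners: Listener[] = [];

  constructor(initial: TreeNode) {
    this.root = initial;
  }

  // Read the value at a path, or undefined if any segment is missing.
  get(path: PathSegment[]): unknown {
    let node: unknown = this.root;
    for (const segment of path) {
      if (node === null || typeof node !== "object") return undefined;
      node = (node as TreeNode)[segment];
    }
    return node;
  }

  // Write a value at a path, creating intermediate containers as needed,
  // then notify every subscriber with the path that changed.
  set(path: PathSegment[], value: unknown): void {
    if (path.length === 0) throw new Error("path must not be empty");
    let node = this.root;
    for (let i = 0; i < path.length - 1; i++) {
      const next = node[path[i]];
      if (next === null || typeof next !== "object") {
        // A numeric next segment means the missing container is a list.
        node[path[i]] = typeof path[i + 1] === "number" ? [] : {};
      }
      node = node[path[i]] as TreeNode;
    }
    node[path[path.length - 1]] = value;
    for (const listener of this.listeners) listener(path);
  }

  // Register a listener; the returned function unsubscribes it.
  subscribe(listener: Listener): () => void {
    this.listeners.push(listener);
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }
}

// Any component can write to the tree; every subscriber sees the change.
const tree = new MessageTree({ agents: [{ name: "ego" }] });
const changed: string[] = [];
const unsubscribe = tree.subscribe((path) => {
  changed.push(path.join("."));
});
tree.set(["agents", 0, "speed_mps"], 12.5);
tree.set(["weather", "rain", "intensity"], 0.3);
```

A hand-written component and an auto-generated one could both call `set` and `subscribe` on the same instance, which is what keeps them in sync.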

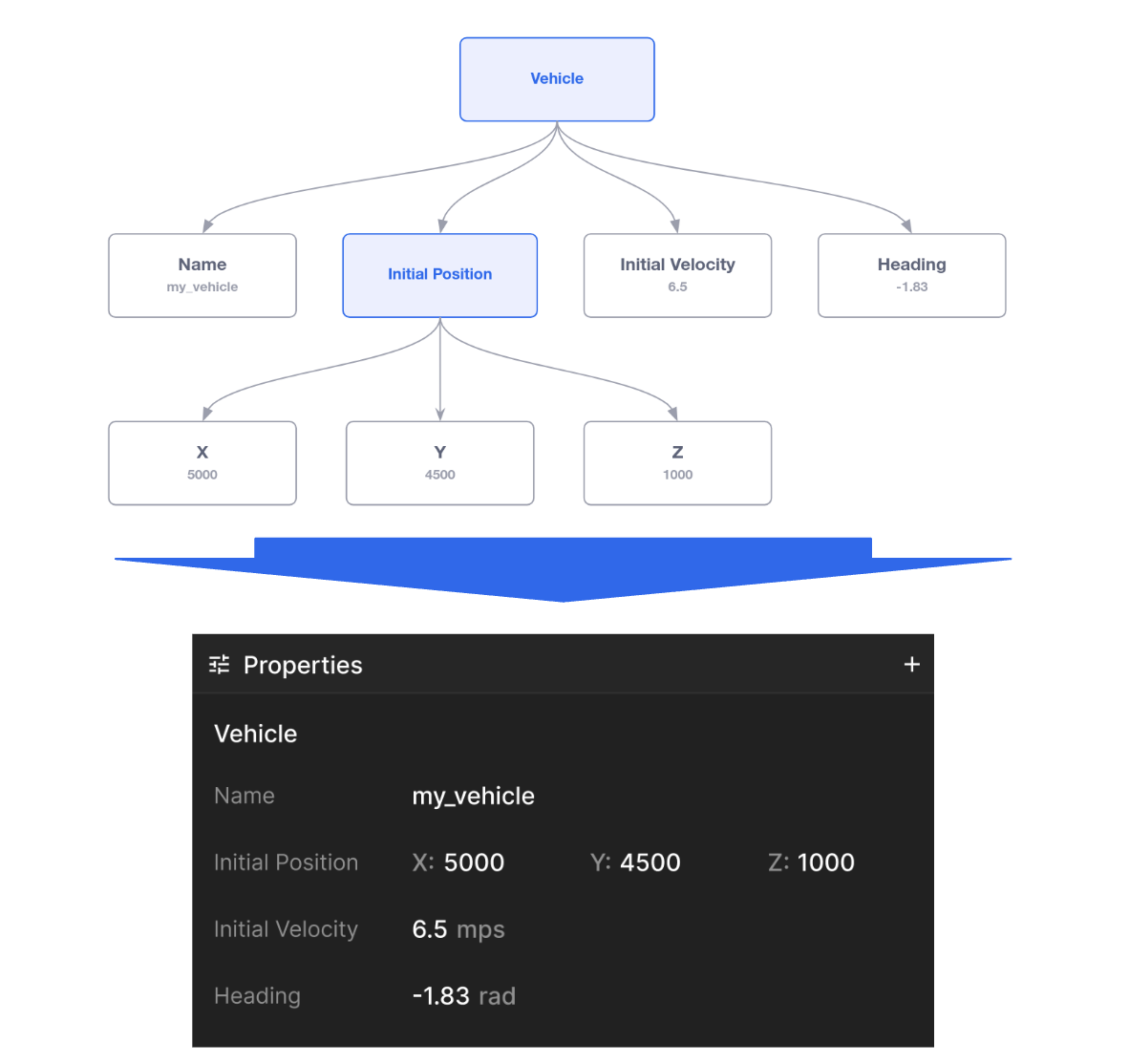

Layer 3: The Proto GUI Tree and Proto GUI Editor

The highest layer is the Proto GUI Tree, which translates the data and schema information into a format specifically designed for rendering the user interface. This layer introduces abstractions for grouping, restructuring, and organizing fields and messages to create a user-friendly and flexible UI. The separation of concerns ensures that product and UI decisions—such as how fields should be grouped or what components should be displayed—are isolated in this layer. This design minimizes the need to modify the lower layers when making changes to the UI, reducing the risk of unintended side effects and speeding up development cycles.
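The grouping and override behavior described here could look something like the sketch below. All names (`UiConfig`, `buildGuiGroups`, the component identifiers) are hypothetical. The key assumption is that overrides can be declared per field type or per path, with path-level overrides taking precedence.

```typescript
// A field as seen by the UI layer (hypothetical shape).
interface FieldNode {
  path: string;
  typeName: string;
  label: string;
}

// Presentation overrides; every property is optional.
interface UiOverride {
  component?: string; // e.g. "dropdown" instead of the default text input
  group?: string; // section title the field is shown under
  order?: number; // lower values are shown first within a group
}

interface UiConfig {
  byType: Record<string, UiOverride>; // applies to all nodes of a type
  byPath: Record<string, UiOverride>; // applies to one specific node
}

interface GuiNode {
  path: string;
  label: string;
  component: string;
  order: number;
}

interface GuiGroup {
  title: string;
  nodes: GuiNode[];
}

function defaultComponent(typeName: string): string {
  switch (typeName) {
    case "string":
      return "text-input";
    case "number":
      return "number-input";
    case "boolean":
      return "checkbox";
    default:
      return "section";
  }
}

function buildGuiGroups(fields: FieldNode[], config: UiConfig): GuiGroup[] {
  const groups = new Map<string, GuiNode[]>();
  fields.forEach((field, index) => {
    // Path-level overrides win over type-level overrides.
    const override: UiOverride = {
      ...config.byType[field.typeName],
      ...config.byPath[field.path],
    };
    const node: GuiNode = {
      path: field.path,
      label: field.label,
      component: override.component ?? defaultComponent(field.typeName),
      order: override.order ?? index, // default to schema order
    };
    const title = override.group ?? "General";
    const bucket = groups.get(title) ?? [];
    bucket.push(node);
    groups.set(title, bucket);
  });
  const result: GuiGroup[] = [];
  groups.forEach((nodes, title) => {
    result.push({ title, nodes: nodes.sort((a, b) => a.order - b.order) });
  });
  return result;
}

// Example input (invented for illustration).
const fields: FieldNode[] = [
  { path: "$.name", typeName: "string", label: "Name" },
  { path: "$.map", typeName: "string", label: "Map" },
  { path: "$.speed", typeName: "number", label: "Speed" },
];
const config: UiConfig = {
  byType: { number: { group: "Dynamics" } },
  byPath: { "$.map": { component: "dropdown", order: -1 } },
};
const guiGroups = buildGuiGroups(fields, config);
```

In this example the `$.map` field becomes a dropdown and moves to the top of its group, while every number field lands in a "Dynamics" group, all without touching the schema or the stored values.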

Finally, the Proto GUI Editor React component consumes the Proto GUI Tree and dynamically generates the actual UI. This modular structure allows us to iterate quickly on UI improvements, tailoring the user experience without touching the foundational data-handling logic.

Build vs. Buy

Before investing in this framework, we carefully considered whether to build it ourselves or repurpose an existing library. The main library we considered was react-json-schema-form, which initially seemed to fit most of our use cases but ultimately fell short when it came to scaling for our requirements. One major limitation was its inability to handle recursive schema definitions, which are central to our complex scenario language. Additionally, the library preloads the entire JSON schema upfront, which poses significant performance challenges for our use case, where only specific subsections of the schema might need to be rendered at a given time. This lack of support for rendering subsections while leaving other parts collapsed made it unsuitable for our needs.

Furthermore, react-json-schema-form offered limited configurability and extensibility. For our scenario editor, we needed a high degree of control over how fields and messages were rendered and displayed. The library’s APIs imposed restrictions that would have constrained our ability to implement features like custom validation, dynamic reordering, and tailored user experiences. Ultimately, these limitations led us to build our own solution that was purpose-built for scalability, flexibility, and the unique requirements of our scenario editor.
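The recursive-schema and partial-rendering requirements above can be illustrated with a small sketch of on-demand expansion. This shows the general technique only, not the internals of either library, and the `LazyNode` shape and helper names are hypothetical. A node's children are created only when the node is expanded, so a message that contains itself never causes unbounded work.

```typescript
// Message name -> (field name -> referenced message name, or null for a scalar).
type MessageSchemas = Record<string, Record<string, string | null>>;

interface LazyNode {
  typeName: string | null; // null for scalar fields
  children: Record<string, LazyNode> | null; // null until the node is expanded
}

function makeNode(typeName: string | null): LazyNode {
  return { typeName, children: null };
}

// Materialize exactly one level of children; deeper levels stay collapsed.
function expand(node: LazyNode, schemas: MessageSchemas): LazyNode {
  if (node.typeName === null || node.children !== null) return node;
  const fields = schemas[node.typeName];
  if (!fields) throw new Error(`Unknown message type: ${node.typeName}`);
  const children: Record<string, LazyNode> = {};
  for (const name of Object.keys(fields)) {
    children[name] = makeNode(fields[name]);
  }
  node.children = children;
  return node;
}

// Count the nodes that currently exist in memory.
function countMaterialized(node: LazyNode): number {
  let count = 1;
  if (node.children) {
    for (const key of Object.keys(node.children)) {
      count += countMaterialized(node.children[key]);
    }
  }
  return count;
}

// A self-referential message: eager expansion of this schema would never finish.
const schemas: MessageSchemas = {
  Behavior: { name: null, fallback: "Behavior" },
};
const root = expand(makeNode("Behavior"), schemas);
```

Each call to `expand` adds a fixed number of nodes, so memory grows with what the user has actually opened rather than with the size of the full schema.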

To the Future

This new GUI editor has served as a framework not only for the scenario editor but also for many other editors, such as the GUI editor for chart configuration and another for general user-level configuration. We continue to look for ways to improve this framework and the usability and accessibility of all our products while maintaining engineering velocity.

Join Us

If building innovative, user-friendly interfaces for complex technical domains excites you, Applied Intuition is hiring! Explore our open frontend engineering roles on our careers page and help shape the future of autonomous vehicle development.
